How I Learned To Stop Worrying And Love Multimedia Writing

Prior to the World Wide Web, the act of writing remained consistent for centuries. Words were put on paper, and occasionally, people would read them. The tools might change (quills, printing presses, typewriters, pens, what have you), and an adventurous author might throw in imagery to complement their copy.

We all know that the web shook things up. With its arrival, writing could become interactive and dynamic. As web development progressed, the creative possibilities of digital content grew — and continue to grow — exponentially. The line between web writing and web technologies is blurry these days, and by and large, I think that’s a good thing, though it brings its own challenges. As a sometimes-engineer-sometimes-journalist, I straddle those worlds more than most and have grown to view the overlap as the future.

Writing for the web is different from traditional forms of writing. It is not a one-size-fits-all process. I’d like to share the benefits of writing content in digital formats like MDX using a personal project of mine as an example. And, by the end, my hope is to convince you of the greater writing benefits of MDX over more traditional formats.

A Little About Markdown

At its most basic, MDX is Markdown with components in it. For those not in the know, Markdown is a lightweight markup language created by John Gruber in 2004, and it’s everywhere today. GitHub, Trello, Discord: all sorts of sites and services use it. It’s especially popular for authoring blog posts, which makes sense as blogging is very much the digital equivalent of journaling. The syntax doesn’t “get in the way,” and many content management systems support it.

Markdown’s goal is an “easy-to-read and easy-to-write plain text format” that can readily be converted into XHTML/HTML if needed. Since its inception, Markdown was supposed to facilitate a writing workflow that integrated the physical act of writing with digital publishing.

We’ll get to actual examples later, but for the sake of explanation, compare a block of text written in HTML to the same text written in Markdown.

HTML is a pretty legible format as it is:

<h2>Post Title</h2>

<p>This is an example block of text written in HTML. We can link things up like this, or format the code with <strong>bolding</strong> and <em>italics</em>. We can also make lists of items:</p>

<ul>

<li>Like this item</li>

<li>Or this one</li>

<li>Perhaps a third?</li>

</ul>

<img src="image.avif" alt="And who doesn't enjoy an image every now and then?">

But Markdown is somehow even less invasive:

## Post Title

This is an example block of text written in Markdown. We can link things up like this or format the code with **bolding** and *italics*. We can also make lists of items:

- Like this item

- Or this one

- Perhaps a third?

I’ve been a Markdown disciple ever since I first learned to code. Given its clean, relatively simple syntax and wide compatibility, it’s no wonder that Markdown is as pervasive as it is today. Having structural semantics akin to HTML while preserving the flow of plain text writing is a good place to be.

However, it could be accused of being a bit too clean at times. If you want to communicate with words and images, you’re golden, but if you want to jazz things up, you’ll find yourself looking further afield for other options.

Gruber set out to create a “format for writing for the web,” and given its ongoing popularity, you have to say he succeeded, yet the web 20 years ago is a long way away from what it is today.

This is the all-important context for what I want to discuss about MDX because MDX is an offshoot of Markdown, only more capable of supporting richer forms of multimedia — and even user interaction. But before we get into that, we should also discuss the concept of web components because that’s the second significant piece that MDX brings to the table.

Further Reading

- “Thoughts On Markdown” by Knut Melvær

- “Improving The Accessibility Of Your Markdown” by Eric Bailey

The move towards richer multimedia websites and apps has led to a thriving ecosystem of web development frameworks and libraries, including React, Vue, Svelte, and Astro, to name a few. The idea that we can have reusable components that are not only interactive but also respond to each other has driven this growth and continues to push on evolving web platform features like web components.

MDX is like a bridge that connects Markdown with modern web tooling. Simply put, MDX weds Markdown’s simplicity with the creative possibilities of modern web frameworks.

By leaning into the overlaps rather than trying to abstract them away at all costs, we find untold potential for beautiful digital content.

Further Reading

- “The Key To Good Component Design Is Selfishness” by Daniel Yuschick

- “Developer Decisions For Building Flexible Components” by Michelle Barker

- “When CSS Isn’t Enough: JavaScript Requirements For Accessible Components” by Stephanie Eckles

- “A Complete Guide To Accessible Front-End Components” by Vitaly Friedman

My own experience with MDX took shape in a side project of mine: teeline.online. To cut a long story short, before I was a software engineer, I was a journalist, and part of my training involved learning a type of shorthand called Teeline. What it boils down to is ripping out as many superfluous letters as possible — I like to call this process “disemvowelment” — then using Teeline’s alphabet to write the remaining content. This has allowed people like me to write lots of words very quickly.

During my studies, I found online learning resources lacking, so as my engineering skills improved, I started working on the kind of site I’d have used when I was a student if it was available. Hence, teeline.online.

I built the teeline.online site with the Svelte framework for its components. The site’s centerpiece is a dataset of shorthand characters and combinations with which hundreds of outlines can be rendered, combined, and animated as SVG paths.

Likewise, Teeline’s “disemvowelment” script could be wired into a single component that I could then use as many times as I like.
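To give a rough feel for what a script like that does, here is a minimal JavaScript sketch. It is not the site’s actual code: the function name disemvowel and its two rules (drop vowels after a word’s first letter, then collapse doubled letters) are simplifying assumptions, and real Teeline conventions are more nuanced.

```javascript
// Hypothetical approximation of "disemvowelment"; real Teeline rules are richer.
function disemvowel(text) {
  return text
    .split(/\s+/)
    .filter(Boolean)
    .map((word) => {
      const lower = word.toLowerCase();
      // Keep the first letter, then drop vowels from the rest of the word.
      const rest = lower.slice(1).replace(/[aeiou]/g, "");
      // Collapse runs of the same letter into a single letter.
      return (lower[0] + rest).replace(/(.)\1+/g, "$1");
    })
    .join(" ");
}

console.log(disemvowel("shorthand letters"));
```

Because the logic lives in one plain function, a component could call it wherever a longhand word needs converting.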

Then, of course, as is only natural when working with components, I could combine them to show the Teeline evolution that converts longhand words into shorthand outlines.

The Markdown, meanwhile, looks as simple as this:

It’s not exactly the sort of complex codebase you might expect for an app. Meanwhile, the files themselves can sit in a nice, tidy directory of their own:

The syllabus is neatly filed away in its own folder. With a bit of metadata sprinkled in, I have everything I need to render an entire section of the site using routing. The setup feels like a fluid medium between worlds. If you want to write with words and pictures, you can. If an idea comes to mind for a component that would better express what you’re going for, you can go make it and drop it in.

In fairness, a “WordToOutline” component like this might not mean much to Teeline newcomers, though with such a clear connection between the Markdown and the rendered pages, it’s not much of a stretch to work out what it is. And, of course, there’s always the likes of services like Storybook that can be used to organize component libraries as they grow.

The raw form of multimedia content can be pretty unsightly — something that needs to be kept at arm’s length by content management systems. With MDX — and its ilk — the content feels rather friendly and legible.

Benefits

I think you can start to see some of the benefits of an MDX setup like this. There are two key benefits in particular that I think are worth calling out.

Editorial Benefits

First and foremost, MDX doesn’t distract from the writing and editorial flow of working with content. When we’re working with traditional code languages, even HTML, the content gets cluttered with things like opening and closing tags. And it gets even more cluttered if we need the added complexity of embedding components in the content.

MDX (and Markdown, for that matter) is much less verbose. Content is a first-class citizen that takes up way less space than typical markup, making it clear and legible. And where we need the complex affordance of components, those can be dropped in without disrupting that nice editorial experience.

Another key benefit of using MDX is reusability. If, for example, I want to display the same information as images instead, each image would have to be bespoke. But we all know how inefficient it is to maintain content in raster images — it requires making edits in a completely different application, which is highly inconvenient. With an old-school approach, if I update the design of the site, I’m left having to create dozens of images in the new style.

With MDX (or an equivalent like MDsveX), I only need to make the change once, and it updates everywhere. Having done the leg work of building reusable components, I can weave them throughout the syllabus as I see fit, safe in the knowledge that updates will roll out across the board — and do it without affecting the editorial experience whatsoever.

Consider the time it would take to create images or videos representing the same thing. Using fixed assets like images becomes a form of technical (or perhaps editorial) debt that adds up over time, while a multimedia approach that leans into components proves to be faster and more flexible than vanilla methods.

Tech Benefits

I just made the point that working with reusable components in MDX allows Markdown content to become more robust without affecting the content’s legibility as we author it. Using Svelte’s version of MDX, MDsveX, I was able to combine the clean, readable conventions of Markdown with the rich, interactive potential of components.

Caveats

It’s only right that all my gushing about MDX and its benefits be tempered with a reality check or two. Like anything else, MDX has its limitations, and your mileage with it will vary.

That said, I believe that those limitations are likely to show up when MDX is perhaps not the best choice for a particular project. There’s a sweet spot that MDX fills and it’s when we need to sprinkle in additional web functionality to the content. We get the best of two worlds: minimal markup and modern web features.

But if components aren’t needed, MDX is overkill when all you need is a clean way to write content that ports nicely into HTML to be consumed by whatever app or platform you use to display it on the web.

Without components, MDX is akin to caring for a skinned elbow with a cast; it’s way more than what’s needed in that situation, and the returns you get from Markdown’s legibility will diminish.

Similarly, if your technical needs go beyond components, you may be looking at a more complex architecture than what MDX can support, and you would be best leaning into what works best for content in the particular framework or stack you’re using.

Code doesn’t age as well as words or images do. An MDX-esque approach does sign you up for the maintenance work of dependency updates, refactoring, and — god forbid — framework migrations. I haven’t had to face the last of those realities yet, though I’d say the first two are well worth it. Indeed, they’re good habits to keep.

Key Takeaways

Writing with MDX continues to be a learning experience for me, but it’s already made a positive impact on my editorial work.

Specifically, I’ve found that MDX improves the quality of my writing. I think more laterally about how to convey ideas.

Is what I’m saying best conveyed in words, an image, or a data visualization? Perhaps an interactive game?

There is way more potential to enhance my words with componentry than I would get with Markdown alone, opening more avenues for what I can say and how I say it.

Of course, those components do not come for free. MDX does sign you up to build those, regardless of whether you have a set of predefined components included in your framework. At the same time, I’d argue that the opportunities MDX opens up for writing greatly outweigh having to build or maintain a few components.

If MDX had been around in the age of Leonardo da Vinci, he might have reached for it in his journals. I know I’m taking a great leap of assumption here, but the complexity of what he was writing and trying to describe in technical terms with illustrations would have benefited greatly from MDX for everything from interactive demos of his ideas to a better writing experience overall.

Further Reading

- “The Power of Pen and Paper Sketching” by Tracy Osborne

- “What Leonardo Da Vinci Can Teach Us About Web Design” by Frederick O’Brien

In many respects, MDX’s rich, varied way of approaching content is something that Markdown — and writing for the web in general — encourages already. We don’t think only in terms of words but of links, images, and semantic structure. MDX and its equivalents merely take the lid off the cookie jar so we can enhance our work.

Wouldn’t it be nice if… is a redundant turn of phrase on the web. There may be technical hurdles — or, in my case, skill and knowledge hurdles — but it’s a buzz to think about ways in which your thoughts can best manifest on screen.

At the same time, the simplicity of Markdown is so unintrusive. If someone wants to write content formatted in vanilla Markdown, it’s totally possible to do that without trading up to MDX.

Just having the possibility of bespoke multimedia content is enough to change the creative process. It leaves you using words because you want to, not because you have to.

Why describe the solar system when you can render an explorable one? Why have a picture of a proposed skyscraper when you can display a 3D model? Writing with MDX (or, more accurately, MDsveX) has changed my entire thought process. Potential answers to the question, How do I best get this across?, become more expansive.

As You Please

Good things happen when worlds collide. New possibilities emerge when seemingly disparate things come together. Many content management systems shield writers — and writing — from code. To my mind, this is like shielding painters from wider color palettes, chefs from exotic ingredients, or sculptors from different types of tools.

Leaning into the overlap between writing and coding gets us closer to one of the web’s great joys: if you can imagine it, you can probably do it.

Uniting Web And Native Apps With 4 Unknown JavaScript APIs

A couple of years ago, four JavaScript APIs landed at the bottom of awareness in the State of JavaScript survey. I took an interest in those APIs because they have so much potential to be useful but don’t get the credit they deserve. Even after a quick search, I was amazed at how many new web APIs have been added to the web platform without getting their due, held back by a lack of both awareness and browser support.

That situation can be a “catch-22”:

An API is interesting but lacks awareness due to incomplete support, and there is no immediate need to support it due to low awareness.

Most of these APIs are designed to power progressive web apps (PWA) and close the gap between web and native apps. Bear in mind that creating a PWA involves more than just adding a manifest file. Sure, it’s a PWA by definition, but it functions like a bookmark on your home screen in practice. In reality, we need several APIs to achieve a fully native app experience on the web. And the four APIs I’d like to shed light on are part of that PWA puzzle that brings to the web what we once thought was only possible in native apps.

You can see all these APIs in action in this demo as we go along.

1. Screen Orientation API

The Screen Orientation API can be used to sniff out the device’s current orientation. Once we know whether a user is browsing in a portrait or landscape orientation, we can use it to enhance the UX for mobile devices by changing the UI accordingly. We can also use it to lock the screen in a certain position, which is useful for displaying videos and other full-screen elements that benefit from a wider viewport.

Using the global screen object, you can access various properties the screen uses to render a page, including the screen.orientation object. It has two properties:

- type: The current screen orientation. It can be "portrait-primary", "portrait-secondary", "landscape-primary", or "landscape-secondary".

- angle: The current screen orientation angle. It can be any number from 0 to 360 degrees, but it’s normally set in multiples of 90 degrees (e.g., 0, 90, 180, or 270).

On mobile devices, an angle of 0 degrees most often corresponds to a "portrait" (vertical) type, but on desktop devices, it typically corresponds to "landscape" (horizontal). This makes the type property the more reliable indicator of a device’s true position.

The screen.orientation object also has two methods:

- .lock(): This is an async method that takes a type value as an argument to lock the screen.

- .unlock(): This method unlocks the screen to its default orientation.

And lastly, screen.orientation fires a "change" event whenever the orientation changes. (The older orientationchange event on window reports the same thing but is deprecated.)

Finding And Locking Screen Orientation

Let’s code a short demo using the Screen Orientation API to know the device’s orientation and lock it in its current position.

This can be our HTML boilerplate:

<main>

<p>

Orientation Type: <span class="orientation-type"></span>

<br />

Orientation Angle: <span class="orientation-angle"></span>

</p>

<button type="button" class="lock-button">Lock Screen</button>

<button type="button" class="unlock-button">Unlock Screen</button>

<button type="button" class="fullscreen-button">Go Full Screen</button>

</main>

On the JavaScript side, we inject the screen orientation type and angle properties into our HTML.

let currentOrientationType = document.querySelector(".orientation-type");

let currentOrientationAngle = document.querySelector(".orientation-angle");

currentOrientationType.textContent = screen.orientation.type;

currentOrientationAngle.textContent = screen.orientation.angle;

Now, we can see the device’s orientation and angle properties. On my laptop, they are "landscape-primary" and 0°.

If we listen to the change event on the screen.orientation object, we can see how the values are updated each time the screen rotates.

screen.orientation.addEventListener("change", () => {

currentOrientationType.textContent = screen.orientation.type;

currentOrientationAngle.textContent = screen.orientation.angle;

});

To lock the screen, we need to first be in full-screen mode, so we will use another extremely useful feature: the Fullscreen API. Nobody wants a webpage to pop into full-screen mode without their consent, so we need transient activation (i.e., a user click) from a DOM element to work.

The Fullscreen API has two methods:

- Document.exitFullscreen() is used from the global document object.

- Element.requestFullscreen() makes the specified element and its descendants go full-screen.

We want the entire page to be full-screen so we can invoke the method from the root element at the document.documentElement object:

const fullscreenButton = document.querySelector(".fullscreen-button");

fullscreenButton.addEventListener("click", async () => {

// If it is already in full-screen, exit to normal view

if (document.fullscreenElement) {

await document.exitFullscreen();

} else {

await document.documentElement.requestFullscreen();

}

});

Next, we can lock the screen in its current orientation:

const lockButton = document.querySelector(".lock-button");

lockButton.addEventListener("click", async () => {

try {

await screen.orientation.lock(screen.orientation.type);

} catch (error) {

console.error(error);

}

});

And do the opposite with the unlock button:

const unlockButton = document.querySelector(".unlock-button");

unlockButton.addEventListener("click", () => {

screen.orientation.unlock();

});

Can’t We Check Orientation With a Media Query?

Yes! We can indeed check page orientation via the orientation media feature in a CSS media query. However, media queries compute the current orientation by checking if the width is “bigger than the height” for landscape or “smaller” for portrait. By contrast, the Screen Orientation API checks for the screen rendering the page regardless of the viewport dimensions, making it resistant to inconsistencies that may crop up with page resizing.
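The difference is easy to see by reading both values side by side. The following is a minimal sketch, not part of the demo: compareOrientation is a hypothetical helper that takes a window-like object so it can be tried outside a browser too.

```javascript
// Compare the viewport-based media query with the Screen Orientation API.
// `win` is expected to look like the browser's `window` object.
function compareOrientation(win) {
  // The media query only looks at the viewport's width and height.
  const viewport = win.matchMedia("(orientation: portrait)").matches
    ? "portrait"
    : "landscape";
  // screen.orientation.type looks like "landscape-primary"; keep the first part.
  const screen = win.screen.orientation.type.split("-")[0];
  return { viewport, screen, agree: viewport === screen };
}

// In a browser: console.log(compareOrientation(window));
```

On a landscape monitor with a tall, narrow browser window, the two values should disagree: the media query reports portrait while the API still reports landscape.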

You may have noticed how PWAs like Instagram and X force the screen to be in portrait mode even when the native system orientation is unlocked. It is important to notice that this behavior isn’t achieved through the Screen Orientation API, but by setting the orientation property on the manifest.json file to the desired orientation type.

Another API I’d like to poke at is the Device Orientation API. It provides access to a device’s gyroscope sensors to read the device’s orientation in space, something used all the time in mobile apps, mainly games. The API makes this happen with a deviceorientation event that triggers each time the device moves. Its event object has the following properties:

- event.alpha: Orientation along the Z-axis, ranging from 0 to 360 degrees.

- event.beta: Orientation along the X-axis, ranging from -180 to 180 degrees.

- event.gamma: Orientation along the Y-axis, ranging from -90 to 90 degrees.

Moving Elements With Your Device

In this case, we will make a 3D cube with CSS that can be rotated with your device! The full instructions I used to make the initial CSS cube are credited to David DeSandro and can be found in his introduction to 3D transforms.

To rotate the cube, we change its CSS transform properties according to the device orientation data:

const currentAlpha = document.querySelector(".currentAlpha");

const currentBeta = document.querySelector(".currentBeta");

const currentGamma = document.querySelector(".currentGamma");

const cube = document.querySelector(".cube");

window.addEventListener("deviceorientation", (event) => {

  currentAlpha.textContent = event.alpha;

  currentBeta.textContent = event.beta;

  currentGamma.textContent = event.gamma;

  cube.style.transform = `rotateX(${event.beta}deg) rotateY(${event.gamma}deg) rotateZ(${event.alpha}deg)`;

});
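One detail worth guarding against: alpha, beta, and gamma can be null when a device has no sensor data to report. Here is a minimal sketch of a helper that builds the transform string with a fallback. The name toCubeTransform and the rounding to whole degrees are my own assumptions, not part of the demo.

```javascript
// Build a CSS transform string from device orientation readings.
// Readings can be null when no sensor data is available, so fall back to 0.
function toCubeTransform({ alpha, beta, gamma }) {
  const deg = (value) => `${Math.round(value ?? 0)}deg`;
  return `rotateX(${deg(beta)}) rotateY(${deg(gamma)}) rotateZ(${deg(alpha)})`;
}

// In a browser, inside the deviceorientation listener:
// cube.style.transform = toCubeTransform(event);
console.log(toCubeTransform({ alpha: 90.4, beta: -45.6, gamma: null }));
```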

This is the result:

Let’s turn our attention to the Vibration API, which, unsurprisingly, allows access to a device’s vibrating mechanism. This comes in handy when we need to alert users with in-app notifications, like when a process is finished or a message is received. That said, we have to use it sparingly; no one wants their phone blowing up with notifications.

There’s just one method that the Vibration API gives us, and it’s all we need: navigator.vibrate().

vibrate() is available globally from the navigator object and takes an argument for how long a vibration lasts in milliseconds. It can be either a number or an array of numbers representing a pattern of vibrations and pauses.

navigator.vibrate(200); // vibrate 200ms

navigator.vibrate([200, 100, 200]); // vibrate 200ms, wait 100, and vibrate 200ms.
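Since support varies, it can help to check for the method before calling it. Below is a minimal sketch under that assumption. buildPattern and safeVibrate are hypothetical helpers, and safeVibrate takes a navigator-like object so the logic can be exercised outside a browser.

```javascript
// Build an alternating vibrate/pause pattern,
// e.g. 3 pulses of 200ms separated by 100ms pauses.
function buildPattern(pulses, vibrateMs, pauseMs) {
  const pattern = [];
  for (let i = 0; i < pulses; i++) {
    pattern.push(vibrateMs);
    // Only add a pause between pulses, not after the last one.
    if (i < pulses - 1) pattern.push(pauseMs);
  }
  return pattern;
}

// Vibrate only if the method exists.
// `nav` is expected to look like the browser's `navigator` object.
function safeVibrate(nav, pattern) {
  if (!nav || typeof nav.vibrate !== "function") return false;
  return nav.vibrate(pattern);
}

// In a browser: safeVibrate(navigator, buildPattern(3, 200, 100));
```

Returning false for unsupported browsers mirrors the boolean that vibrate() itself returns, so callers can treat both cases the same way.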

Vibration API Demo

Let’s make a quick demo where the user inputs how many milliseconds they want their device to vibrate and buttons to start and stop the vibration, starting with the markup:

<main>

<form>

<label for="milliseconds-input">Milliseconds:</label>

<input type="number" id="milliseconds-input" value="0" />

</form>

<button class="vibrate-button">Vibrate</button>

<button class="stop-vibrate-button">Stop</button>

</main>

We’ll add an event listener for a click and invoke the vibrate() method:

const vibrateButton = document.querySelector(".vibrate-button");

const millisecondsInput = document.querySelector("#milliseconds-input");

vibrateButton.addEventListener("click", () => {

navigator.vibrate(millisecondsInput.value);

});

To stop vibrating, we override the current vibration with a zero-millisecond vibration.

const stopVibrateButton = document.querySelector(".stop-vibrate-button");

stopVibrateButton.addEventListener("click", () => {

navigator.vibrate(0);

});

It used to be that only native apps could connect to a device’s “contacts”. But now we have the fourth and final API I want to look at: the Contact Picker API.

The API grants web apps access to the device’s contact lists. Specifically, we get the contacts.select() async method available through the navigator object, which takes the following two arguments:

- properties: This is an array containing the information we want to fetch from a contact card, e.g., "name", "address", "email", "tel", and "icon".

- options: This is an object that can only contain the multiple boolean property to define whether or not the user can select one or multiple contacts at a time.

Browser Support

I’m afraid that browser support is next to zilch on this one, limited to Chrome Android, Samsung Internet, and Android’s native web browser at the time I’m writing this.
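Given that patchy support, it may be worth asking the browser which contact properties it can actually return before requesting them. The API exposes navigator.contacts.getProperties() for this. The sketch below is only an illustration: pickSupportedProps is a hypothetical helper that takes a navigator-like object.

```javascript
// Ask the browser which contact properties it supports
// and keep only the ones we want.
// `nav` is expected to look like the browser's `navigator` object.
async function pickSupportedProps(nav, wanted) {
  if (!nav || !nav.contacts || typeof nav.contacts.getProperties !== "function") {
    return []; // Contact Picker API unavailable
  }
  const supported = await nav.contacts.getProperties();
  return wanted.filter((prop) => supported.includes(prop));
}

// In a browser:
// const props = await pickSupportedProps(navigator, ["name", "tel", "icon"]);
```

An empty array is a signal to hide or disable the contact button rather than let the call fail.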

Selecting User’s Contacts

We will make another demo to select and display the user’s contacts on the page. Again, starting with the HTML:

<main>

<button class="get-contacts">Get Contacts</button>

<p>Contacts:</p>

<ul class="contact-list">

<!-- We’ll inject a list of contacts -->

</ul>

</main>

Then, in JavaScript, we first select our elements from the DOM and choose which properties we want to pick from the contacts.

const getContactsButton = document.querySelector(".get-contacts");

const contactList = document.querySelector(".contact-list");

const props = ["name", "tel", "icon"];

const options = {multiple: true};

Now, we asynchronously pick the contacts when the user clicks the getContactsButton.

const getContacts = async () => {

try {

const contacts = await navigator.contacts.select(props, options);

} catch (error) {

console.error(error);

}

};

getContactsButton.addEventListener("click", getContacts);

Using DOM manipulation, we can then append a list item for each contact to the contactList element.

const appendContacts = (contacts) => {

  contacts.forEach(({name, tel, icon}) => {

    const contactElement = document.createElement("li");

    contactElement.innerText = `${name}: ${tel}`;

    contactList.appendChild(contactElement);

  });

};

const getContacts = async () => {

  try {

    const contacts = await navigator.contacts.select(props, options);

    appendContacts(contacts);

  } catch (error) {

    console.error(error);

  }

};

getContactsButton.addEventListener("click", getContacts);

Appending an image is a little tricky since we will need to convert it into a URL and append it for each item in the list.

const getIcon = (icon) => {

  if (icon.length > 0) {

    const imageUrl = URL.createObjectURL(icon[0]);

    const imageElement = document.createElement("img");

    imageElement.src = imageUrl;

    return imageElement;

  }

};

const appendContacts = (contacts) => {

  contacts.forEach(({name, tel, icon}) => {

    const contactElement = document.createElement("li");

    contactElement.innerText = `${name}: ${tel}`;

    contactList.appendChild(contactElement);

    const imageElement = getIcon(icon);

    // getIcon() returns undefined for contacts without an icon, so only append when there is one

    if (imageElement) {

      contactElement.appendChild(imageElement);

    }

  });

};

const getContacts = async () => {

  try {

    const contacts = await navigator.contacts.select(props, options);

    appendContacts(contacts);

  } catch (error) {

    console.error(error);

  }

};

getContactsButton.addEventListener("click", getContacts);

And here’s the outcome:

Note: The Contact Picker API will only work if the context is secure, i.e., the page is served over https:// or wss:// URLs.
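Both conditions (a secure context and an available picker) can be checked up front. This is a minimal sketch rather than part of the demo: canPickContacts is a hypothetical helper, and it takes a window-like object so it can be tested with stand-ins.

```javascript
// Check that the page runs in a secure context and that the picker exists.
// `win` is expected to look like the browser's `window` object.
function canPickContacts(win) {
  return Boolean(
    win &&
      win.isSecureContext &&
      win.navigator &&
      win.navigator.contacts &&
      typeof win.navigator.contacts.select === "function"
  );
}

// In a browser: getContactsButton.disabled = !canPickContacts(window);
```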

There we go, four web APIs that I believe would empower us to build more useful and robust PWAs but have slipped under the radar for many of us. This is, of course, due to inconsistent browser support, so I hope this article can bring awareness to new APIs so we have a better chance to see them in future browser updates.

Aren’t they interesting? We saw how much control we have over the orientation of a device and its screen, as well as how much access we get to a device’s hardware features (such as vibration) and to information from other apps that we can use in our own UI.

But as I said much earlier, there’s a sort of infinite loop where a lack of awareness begets a lack of browser support. So, while the four APIs we covered are super interesting, your mileage will inevitably vary when it comes to using them in a production environment. Please tread cautiously and refer to Caniuse for the latest support information, or check for your own devices using WebAPI Check.

Introducing Search & Replace Everything by WPCode: Bulk Editing in WordPress Made Easy

Have you ever wanted to make bulk updates to your WordPress site?

Wouldn’t it be nice if you could update hundreds of posts with a single click… without having to update them manually?

If you’re like me and most other smart website owners, then you have at least wished for this solution a couple of times in your WordPress journey.

Today, I am excited to announce the new Search & Replace Everything by WPCode, a free tool to easily perform bulk search and replace operations in WordPress.

Why Did We Build This Tool?

By default, WordPress does not come with a Find and Replace tool. This makes it hard to do bulk updates on your site.

That is especially true if you want to quickly update a link on every page, change an image that’s used in multiple areas, or make bulk changes when you’re moving your site.

Website owners either have to update every page manually, which is extremely inefficient and time-consuming, or hire a developer to write a SQL query, which can be expensive.

And that’s why I decided to create Search & Replace Everything by WPCode.

Search & Replace Everything revolutionizes how you update your content on your site once and for all.

This tool is designed for anyone who manages a WordPress site and wants to save time and avoid errors.

Here are some of the top use cases:

- Bulk Update Content in WordPress Posts: As the plugin’s name suggests, you can search and replace any content on your website with a single click.

- Replace an Image Used Across Multiple Locations: Quickly replace outdated images anywhere on your site with the new ones with just a click.

- Updating URLs After WordPress Migration: When you migrate a WordPress website to a new address, you can replace URLs pointing to the old address. The plugin helps you fix all broken links while also saving you time.

Making Bulk Changes in WordPress Effortlessly

With Search & Replace Everything, our goal is to make it easy to make bulk changes to your website.

Instead of writing complex SQL queries on your own or hiring a developer, you can enter what you want to search for and what you need to replace it with.

Let me show you what makes Search & Replace Everything incredibly powerful yet so simple.

1. Update Everything Quick and Easy

Search & Replace Everything comes with a clean user interface. Just go to the Tools » WP Search & Replace page, enter the content you want to find, and then add the content you want to replace it with.

This simple layout ensures that even non-technical users can perform complex operations without hassle.

2. Control Where to Search

Target your changes precisely by selecting specific database tables or searching across all tables for comprehensive updates.

This feature ensures you’re making changes exactly where needed, preventing any unintended modifications.

3. Precision Search with Case Sensitivity

By default, the plugin performs case-sensitive searches, ensuring accurate and specific matches.

For example, a search for “WordPress” will not match “wordpress” or “WORDPRESS”.

However, if you need to make your searches case-insensitive, you can easily toggle the option. This allows you to find and replace text regardless of its case.

For instance, enabling case-insensitive search would allow “WordPress,” “wordpress,” and “WORDPRESS” to be treated as the same.
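To make the distinction concrete, here is a small JavaScript sketch of case-sensitive versus case-insensitive replacement on a plain string. It only illustrates the concept and is not how the plugin works internally, since the plugin operates on database tables. The names searchReplace and escapeRegExp are hypothetical.

```javascript
// Escape characters that have special meaning in a regular expression.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Replace every occurrence of `search`; case-sensitive unless told otherwise.
function searchReplace(content, search, replacement, { caseSensitive = true } = {}) {
  if (!search) return content; // nothing to look for
  const flags = caseSensitive ? "g" : "gi";
  const pattern = new RegExp(escapeRegExp(search), flags);
  // A replacer function keeps "$" in the replacement text literal.
  return content.replace(pattern, () => replacement);
}

console.log(searchReplace("WordPress, wordpress, WORDPRESS", "WordPress", "WP"));
console.log(
  searchReplace("WordPress, wordpress, WORDPRESS", "WordPress", "WP", { caseSensitive: false })
);
```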

4. Preview Before Making Changes

Worried about making mistakes? Preview all the changes before you save them. This feature ensures you get everything right the first time.

5. Replace Any Image in Your Media Library

Replacing images used in multiple places? No problem.

Switch to the ‘Replace Image’ tab, find your image, and click ‘Replace’. It’s that simple.

6. Track & Undo Changes

You can keep track of Search & Replace activity in the ‘History’ tab. This allows you to quickly review the changes you made and undo them with the click of a button.

Note: This feature is available with the paid plan with an introductory $30 discount.

7. Fast, Even on Large Websites

Performing site-wide search and replace operations consumes server resources, which could slow down or crash a website. Search & Replace Everything is designed to be fast and efficient, even if you have a larger website with tons of data.

With Search & Replace Everything, making bulk changes has never been easier.

What’s Coming Next?

Search & Replace Everything by WPCode provides an incredibly powerful tool for WordPress site owners.

It makes advanced database search and replacement operations quite simple for all users.

Before performing bulk updates, always create a fresh WordPress database backup. I recommend using Duplicator. It’s an easy way to back up your database and restore it with a single click if needed.

We’re truly building something special here. If you have ideas on how we can make the plugin more helpful to you, please send us your suggestions.

As always, thank you for your continued support of WPBeginner. We look forward to serving you for years to come.

Yours Truly,

Syed Balkhi

Founder of WPBeginner

The post Introducing Search & Replace Everything by WPCode: Bulk Editing in WordPress Made Easy first appeared on WPBeginner.

A Beginner’s Guide to the WordPress Interactivity API

Learn how to use the WordPress Interactivity API, why it’s essential, and how it simplifies adding interactive features to your blocks. Discover seamless integration with PHP server-side rendering and real-world examples of dynamic user experiences.

How to run a sale in WooCommerce

Case Insensitive CSS Attribute Selector

CSS selectors never cease to amaze me in how powerful they can be in matching complex patterns. Most of that flexibility is in parent/child/sibling relationships, very seldom in value matching. Consider my surprise when I learned that CSS allows matching attribute values regardless of case!

Adding a {space}i to the attribute selector brackets will make the attribute value search case insensitive:

/* case sensitive, only matches "example" */
[class=example] {
  background: pink;
}

/* case insensitive, matches "example", "eXampLe", etc. */
[class=example i] {
  background: lightblue;
}

The use cases for this i flag are likely very limited, especially if this flag is new knowledge for you and you’re used to a lower-case standard. A loose CSS classname standard has led and will continue to lead to problems, so use this case insensitivity flag sparingly!
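One place the flag may be more practical is matching file extensions in links, where casing is often outside the author's control. The sketch below is an illustration rather than part of the original example; the .pdf extension and the styling are assumptions.

```css
/* Hypothetical example: label links to PDF files, whatever the casing
   of the extension (".pdf", ".PDF", ".Pdf", ...) */
a[href$=".pdf" i]::after {
  content: " (PDF)";
  font-size: 0.85em;
}
```

Without the i flag, the same selector would only match links whose href ends in a lower-case ".pdf".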

The post Case Insensitive CSS Attribute Selector appeared first on David Walsh Blog.

Useful Email Newsletters For Designers

Struggling to keep our inboxes under control and aim for that magical state of inbox zero, the notification announcing an incoming email isn’t the most appreciated sound for many of us. However, there are some emails to actually look forward to: A newsletter, curated and written with love and care, can be a nice break in your daily routine, providing new insights and sparking ideas and inspiration for your work.

With so many wonderful design newsletters out there, we know it can be a challenge to decide which newsletter (or newsletters) to subscribe to. That’s why we want to shine a light on some newsletter gems today to make your decision at least a bit easier — and help you discover newsletters you might not have heard of yet. Ranging from design systems to UX writing, motion design, and user research, there sure is something in it for you.

A huge thank you to everyone who writes, edits, and publishes these newsletters to help us all get better at our craft. You are truly smashing! 👏🏼👏🏽👏🏾

Table of Contents

Below you’ll find quick jumps to newsletters on specific topics you might be interested in. Scroll down to browse the complete list or skip the table of contents.

- AI

- business

- career

- content strategy

- design

- design systems

- ethical design

- Figma

- front-end

- information architecture

- interaction design

- leadership

- product design

- sustainability

- user research

- UX

- UX writing

HeyDesigner

🗓 Delivered every Monday

🖋 Written by Tamas Sari

Aimed at product people, UXers, PMs, and design engineers, the HeyDesigner newsletter is packed with a carefully curated selection of the latest design and front-end articles, tools, and resources.

Pixels of the Week

🗓 Delivered weekly

🖋 Written by Stéphanie Walter

Stéphanie Walter’s Pixels of the Week newsletter keeps you informed about the latest UX research, design, tech (HTML, CSS, SVG) news, tools, methods, and other resources that caught Stéphanie’s interest.

TLDR Design

🗓 Delivered daily

🖋 Written by Dan Ni

You’re looking for some bite-sized design inspiration? TLDR Design is a daily newsletter highlighting news, tools, tutorials, trends, and inspiration for design professionals.

DesignOps

🗓 Delivered every two weeks

🖋 Written by Ch'an Armstrong

The DesignOps newsletter provides the DesignOps community with the best hand-picked articles all around design, code, AI, design tools, no-code tools, developer tools, and, of course, design ops.

Adam Silver’s Newsletter

🗓 Delivered weekly

🖋 Written by Adam Silver

Every week, Adam Silver sends out a newsletter aimed at designers, content designers, and front-end developers. It includes short and sweet, evidence-based design tips, mostly about forms UX, but not always.

Smashing Newsletter

🗓 Delivered every Tuesday

🖋 Written by the Smashing Editorial team

Every Tuesday, we publish the Smashing Newsletter with useful tips and techniques on front-end and UX, covering everything from design systems and UX research to CSS and JavaScript. Each issue is curated, written, and edited with love and care, no third-party mailings or hidden advertising.

UX Design Weekly

🗓 Delivered every Monday

🖋 Written by Kenny Chen

UX Design Weekly provides you with a weekly dose of hand-picked user experience design links. Every issue features articles, tools and resources, a UX portfolio, and a quote to spark ideas and get you thinking.

UX Collective

🗓 Delivered weekly

🖋 Written by Fabricio Teixeira and Caio Braga

“Designers are thinkers as much as they are makers.” Following this credo, the UX Collective newsletter helps designers think more critically about their work. Every issue highlights thought-provoking reads, little gems, tools, and resources.

Built For Mars

🗓 Delivered every few weeks

🖋 Written by Peter Ramsey

The Built for Mars newsletter brings Peter Ramsey’s UX research straight to your inbox. It includes in-depth UX case studies and bite-sized UX ideas and experiments.

NN Group

🗓 Delivered weekly

🖋 Written by the Nielsen Norman Group

Studying users around the world, the Nielsen Norman Group provides research-based UX guidance. If you don’t want to miss their latest articles and videos about usability, design, and UX research, you can subscribe to the NN/g newsletter to stay up-to-date.

UX Notebook

🗓 Delivered weekly

🖋 Written by Sarah Doody

The UX Notebook Newsletter is aimed at UX and product professionals who want to learn how to apply UX and design principles to design and grow their teams, products, and careers.

Smart Interface Design Patterns

🗓 Delivered weekly

🖋 Written by Vitaly Friedman

Every issue of the Smart Interface Design Patterns newsletter is dedicated to a common interface challenge and how to solve it to avoid issues down the line. A treasure chest of design patterns and UX techniques.

UX Weekly

🗓 Delivered weekly

🖋 Written by the Interaction Design Foundation

The Interaction Design Foundation is known for their UX courses and webinars for both aspiring designers and advanced professionals. Their UX Weekly newsletter delivers design tips and educational material to help you leverage the power of design.

Design With Care

🗓 Delivered on the first Tuesday of every month

🖋 Written by Alex Bilstein

Healthcare systems desperately need UX designers to improve the status quo for both healthcare professionals and patients. The Design With Care newsletter empowers UX designers to create better healthcare experiences and make an impact that matters.

The UX Gal

🗓 Delivered every Monday

🖋 Written by Slater Katz

Whether you’re about to start your UX content education or want to get better at UX writing, The UX Gal newsletter is for you. Every Monday, Slater Katz sends out a new newsletter with prompts, thoughts, and exercises to build your UX writing and content design skills.

UX Content Collective

🗓 Delivered weekly

🖋 Written by the UX Content Collective

The newsletter by the UX Content Collective is perfect for anyone interested in content design. In it, you’ll find curated UX writing resources, new job openings, and exclusive discounts.

GatherContent

🗓 Delivered weekly

🖋 Written by the GatherContent team

The GatherContent newsletter is a weekly email full of content strategy goodies. It features articles, webinars and masterclasses, new books, free templates, and industry news.

User Research Academy

🗓 Delivered weekly

🖋 Written by Nikki Anderson

If you want to get more creative and confident when conducting user research, the User Research Academy might be for you. With carefully curated articles, podcasts, events, books, and academic resources all around user research, the newsletter is perfect for beginners and senior UX researchers alike.

User Weekly

🗓 Delivered weekly

🖋 Written by Jan Ahrend

What mattered in UX research this week? To keep you up-to-date on trends, methods, and insights across the UX research industry, Jan Ahrend captures the pulse of the UX research community in his User Weekly newsletter.

User Interviews

🗓 Delivered weekly

🖋 Written by the User Interviews team

The UX Research Newsletter by the folks at User Interviews delivers the latest UX research articles, reports, podcast episodes, and special features. It is aimed at professional user researchers as well as teams who need to conduct user research without a dedicated research team.

Baymard Institute

🗓 Delivered weekly

🖋 Written by the Baymard Institute

User experience, web design, and e-commerce are the topics which the Baymard Institute newsletter covers. It features ad-free full-length research articles to give you precious insights into the field.

Design Spells

🗓 Delivered every other Sunday

🖋 Written by Chester How, Duncan Leo, and Rick Lee

Whether it’s micro-interactions or easter eggs, Design Spells celebrates the design details that feel like magic and add a spark of delight to a design.

Justin Volz’s Newsletter

🖋 Written by Justin Volz

Getting you ready for the future of motion design is the goal of Justin Volz’s newsletter. It features UX motion design trends, new UX motion design articles, and more to “make your UI tap dance.”

Design System Guide

🗓 Delivered weekly

🖋 Written by Romina Kavcic

Accompanying her interactive step-by-step guide to design systems, Romina Kavcic sends out the weekly Design System Guide newsletter on all things design systems, design process, and design strategy.

Figmalion

🗓 Delivered weekly

🖋 Written by Eugene Fedorenko

The Figmalion newsletter keeps you up-to-date on what is happening in the Figma community, with curated design resources and a weekly roundup of Figma and design tool news.

Informa(c)tion

🗓 Delivered every other Sunday

🖋 Written by Jorge Arango

The Informa(c)tion newsletter explores the intersection of information, cognition, and design. Each issue includes an essay about information architecture and/or personal knowledge management and a list of interesting links.

Product Design Challenges

🗓 Delivered weekly

🖋 Written by Artiom Dashinsky

How about a weekly design challenge to work on your core design skills, improve your portfolio, or prepare for your next job interview? The Weekly Product Design Challenges newsletter has got you covered. Every week, Artiom Dashinsky shares a new exercise inspired by and used at companies like Facebook, Google, and WeWork to interview UX design candidates.

Fundament

🗓 Delivered every other Thursday

🖋 Written by Arkadiusz Radek and Mateusz Litarowicz

With Fundament, Arkadiusz Radek and Mateusz Litarowicz created a place to share what they’ve learned in their ten-year UX and Product Design careers. The newsletter is about the things that matter in design, the practicalities of the job, the lesser-known bits, and content that will help you grow as a UX or Product Designer.

Case Study Club

🗓 Delivered weekly

🖋 Written by Jan Haaland

How do people design digital products? With curated UX case studies, the Case Study Club newsletter grants insights into other designers’ processes.

Ethical Design Network

🗓 Delivered monthly

🖋 Written by Trine Falbe

The Ethical Design Network is a space for digital professionals to share, discuss, and self-educate about ethical design. You can sign up to the newsletter to receive monthly news, resources, and event updates all around ethical design.

Sustainable UX

🗓 Delivered monthly

🖋 Written by Thorsten Jonas

As designers, we have to take responsibility for more than our users. Shining a light on how to design and build more sustainable digital products, the SUX Newsletter by the Sustainable UX Network helps you stand up to that responsibility.

AI Goodies

🗓 Delivered weekly

🖋 Written by Ioana Teleanu

A brand-new newsletter on AI, design, and UX goodies comes from Ioana Teleanu: AI Goodies. Every week, it covers the latest resources, trends, news, and tools from the world of AI.

d.MBA

🗓 Delivered weekly

🖋 Written by Alen Faljic

Learning business can help you become a better designer. The d.MBA newsletter is your weekly source of briefings from the business world, hand-picked for the design community by Alen Faljic and the d.MBA team.

Dan Mall Teaches

🗓 Delivered weekly

🖋 Written by Dan Mall

Tips, tricks, and tools about design systems, process, and leadership, delivered to your inbox every week. That’s the Dan Mall Teaches newsletter.

Stratatics

🗓 Delivered weekly

🖋 Written by Ryan Rumsey

To do things differently, you must look at your work in a new light. That’s the idea behind the Stratatics newsletter. Each week, Ryan Rumsey provides design leaders and executives (and those who work alongside them) with a new idea to reimagine and deliver their best work.

Do you have a favorite newsletter that isn’t featured in the post? Or maybe you’re writing and publishing a newsletter yourself? We’d love to hear about it in the comments below!

Chris Corner: Git it

Julia Evans has released what she’s saying is one of her most popular zines to date: How Git Works.

I don’t think you’d regret reading it. I imagine most of us get by with knowing just enough Git to do our jobs, but are probably using 5% of what it can really do. Being very strong with Git will almost surely benefit you in your career. Imagine helping a superior out of a sticky situation where it might look like code was lost or otherwise screwed up. Being the solution during an emotional time is clutch. Surely this pairs nicely with Oh Shit, Git!, a real classic from Katie Sylor-Miller which I see has been revitalized with Julia here.

Just the other day here at CodePen Headquarters, I saw a co-worker solve an issue with git bisect. Have you even heard of that?! Imagine there is a bug in your code, but you have absolutely no idea when it happened or where in the code it might be. That’s not a good feeling, but it’s exactly where git bisect comes in. As best I understand it, it sets the HEAD of your repo back in time some amount, and there, you test if the bug is present and you can say git bisect good or git bisect bad. Then it moves the HEAD and you keep testing and eventually it gets closer and closer to the exact commit (or at least a range of commits) where the bug happened. Then you can look at the changed files in those commits and figure out where in the code the bug may have come from. So cool!

I certainly know developers who know Git and work with it exclusively at the command line entirely as-provided. But I find it more common among the command line types that they at least have some aliases set up for the most common things they do. Those might be their own aliases, like they’ll make gco do a git checkout, but it’s worth knowing git itself allows you to make aliases within itself, which could be good since they won’t conflict with anything else. (Have I told you how long I had cp aliased to move to our local CodePen project directory? 🤣).

A much more elaborate take on git aliases is called Gut. With it, you don’t git commit anything (with all the params and whatnot you have to also pass), you gut save which launches a little wizard that asks you questions, and then it does the proper git stuff with the information you give it.

I could see that being great for a beginner, but maybe feeling a little too slow as you get more comfortable at the command line. Except when it comes to the more advanced stuff and how it looks designed to get you out of binds. The fix and undo commands look awfully helpful and are the kind of things where I can never remember the proper commands.

Paweł Grzybek lays out a classic situation:

Let’s say that we are halfway through the feature, intensely focused on a task, when a critical bug needs to be fixed out of the blue. Happens to us all the time! Should we stash the current changes? Should we quickly smash git add . && git commit -m "wip" and promise that we will sort this mess out later?

His answer is no, it’s using git worktrees. It solves the issue by literally making another copy of your project on disk. And you can open and work on it separately but it all goes to the same repo ultimately. So you can leave your half-done uncommitted work on another worktree while you hop to the other to do work. Me, I’m mostly cool with git stash to tuck stuff away while I go work on something else, or even just the ol “work in progress” or “saving work” commit like Paweł mentioned. It’s not pretty but culturally it’s fine on our project. But I can see how you could get into a groove with worktrees, particularly if your editor supports it nicely.

Phew! We probably talked about Git too much, eh? I know nobody cares. Now let’s go back to just doing the 3-4 commands we know every day, just with a few more resources in our pocket when we need them.

I gotta leave you with something else. (Digs through bag of hot links.) Ah here we go. This video rules: Flash is dead so I rebuilt it with javascript. Andrew Jakubowicz walks us through building an interface with a pretty modern and lightweight set of tools. Andrew works for Google on Lit, so it’s sort of a big excuse to show off working with Web Components, but it’s a fun ride. At 8 minutes, more happens than in a typical hour-long video.

How to Set Date Time from Mac Command Line

Working on a web extension that ships to an app store and isn’t immediately modifiable, like a website, can be difficult. Since you cannot immediately deploy updates, you sometimes need to bake in hardcoded date-based logic. Testing future dates can be difficult if you don’t know how to quickly change the date on your local machine.

To change the current date on your Mac, execute the following from the command line:

# Date Format: MMDDYYYY
sudo date -I 06142024

This command does not modify time, only the current date. Using the same command to reset to current date is easy as well!

The post How to Set Date Time from Mac Command Line appeared first on David Walsh Blog.

Create a Striking Portfolio with These Free Webflow Templates

Are you ready to take your portfolio game to the next level? Let’s dive into the exciting world of Webflow templates for creating stunning portfolios! As a web designer, I can vouch for the game-changing benefits these templates bring to the table. Imagine having access to professionally designed layouts and functionalities without spending a dime. That’s the power of free Webflow portfolio templates.

Webflow templates offer a treasure trove of features that can make your portfolio shine. These templates are perfect for designers who want to create impressive designs with dynamic animations and responsive layouts. With just a few clicks, you can customize these templates to reflect your unique style and creativity. Webflow templates are a great way to save time and create a professional, polished look for your website. They make it easy to showcase your work without getting bogged down in coding and design details. So, why settle for mediocrity when you can dazzle with a Webflow portfolio template?

Advantages of using free templates for creating a striking portfolio

Using free Webflow portfolio templates for your portfolio website comes with a plethora of benefits. These templates serve as a solid foundation that jumpstarts your design process, saving you time and effort in crafting a stunning portfolio. They offer a wide range of design options, from sleek and modern layouts to vibrant and creative designs. With these templates, you can easily customize colors, fonts, and layouts to match your style and showcase your work effectively.

One major advantage is that free Webflow templates are designed by professionals with user experience in mind, ensuring that your portfolio is not only visually appealing but also easy to navigate for your viewers. Additionally, these templates are responsive by nature, meaning your portfolio will look fantastic on any device, whether it’s a desktop, tablet, or smartphone. This adaptability ensures that your work shines across various platforms, attracting potential clients and employers to your impressive design skills without missing a beat.

Benefits of using Webflow templates for portfolio creation

When it comes to building a standout portfolio, using Webflow templates is like having a secret weapon in your design arsenal. These templates offer a plethora of benefits for creating a top-notch portfolio website. First off, they are incredibly user-friendly, making the design process smooth and stress-free. With pre-built layouts and elements, you can save valuable time and focus on showcasing your work in the best possible light.

Additionally, Webflow templates are highly customizable, allowing you to tailor every aspect of your portfolio to fit your unique style and vision. This level of flexibility ensures that your portfolio stands out from the rest and leaves a lasting impression on visitors. Moreover, the responsive nature of Webflow templates guarantees that your portfolio looks stunning on any device, from desktops to smartphones.

Overall, using Webflow templates for portfolio creation not only saves time and effort but also elevates the visual appeal and functionality of your website effortlessly. With these templates, you can create a professional and polished portfolio that speaks volumes about your design expertise.

Tips for maximizing the impact of your portfolio with free templates

When it comes to making the most out of your portfolio using free Webflow templates, remember to showcase your best work first. Grab the viewer’s attention with a stunning homepage that highlights your top projects. Keep it simple and clean to ensure your designs shine through without distractions. Additionally, personalize the template to match your style and brand identity. Add a touch of creativity by incorporating unique elements that reflect your personality.

Don’t forget to optimize your images for faster loading times, as nobody likes waiting around for a portfolio to load. Use high-quality visuals that clearly demonstrate your skills and expertise. And lastly, make sure your portfolio is easy to navigate. Organize your projects into categories or sections, making it effortless for visitors to explore and discover your creations. With these tips, you can maximize the impact of your portfolio and leave a lasting impression on potential clients and employers.

In conclusion, using free Webflow portfolio templates is a game-changer for any aspiring web designer. These templates provide a solid foundation to kickstart your portfolio design journey without breaking a sweat. With a wide range of customizable options at your fingertips, crafting a visually stunning and user-friendly portfolio has never been easier. The convenience and flexibility of Webflow templates allow you to showcase your work in the best light possible, impressing potential clients and employers with your creative prowess. So, why make things harder for yourself when you can take advantage of these free resources to elevate your portfolio to new heights? Embrace the power of Webflow templates and watch your design dreams come to life with style and ease. Your portfolio deserves to stand out, and with Webflow, that’s exactly what it will do.

See also

yrFolio – Portfolio Website Template

Matteo Fabbiani – Personal Portfolio Web Template

Personal Portfolio Webflow Website Template

Relume Portfolio Webflow Template

Solveig Portfolio Template

Portfolio Template for Architecture

Student Portfolio

Portfolio Website Template

SkillSet – Minimal Portfolio Template

Dante Portfolio Webflow Template

Free Personal Portfolio Web Template

Product Design Portfolio Template

Photographer’s Portfolio Template

Free Minimalist Portfolio Webflow Template

Uncommon Portfolio Web Template

Indi Harris

Douglas Pinho Portfolio

Hire Flemming

QuickSnap Photographer Template

Overflow

Darren Harroff

The post Create a Striking Portfolio with These Free Webflow Templates appeared first on CSS Author.

What Are CSS Container Style Queries Good For?

We’ve relied on media queries for a long time in the responsive world of CSS but they have their share of limitations and have shifted focus more towards accessibility than responsiveness alone. This is where CSS Container Queries come in. They completely change how we approach responsiveness, shifting the paradigm away from a viewport-based mentality to one that is more considerate of a component’s context, such as its size or inline-size.

Querying elements by their dimensions is one of the two things that CSS Container Queries can do, and, in fact, we call these container size queries to help distinguish them from the other ability: querying against a component’s current styles. We call those container style queries.

Existing container query coverage has been largely focused on container size queries, which enjoy 90% global browser support at the time of this writing. Style queries, on the other hand, are only available behind a feature flag in Chrome 111+ and Safari Technology Preview.

The first question that comes to mind is “What are these style query things?” followed immediately by “How do they work?” There are some nice primers on them that others have written, and they are worth checking out.

But the more interesting question about CSS Container Style Queries might actually be “Why should we use them?” The answer, as always, is nuanced and could simply be “it depends.” But I want to poke at style queries a little more deeply, not at the syntax level, but at what exactly they are solving and what sort of use cases we would find ourselves reaching for them in our work if and when they gain browser support.

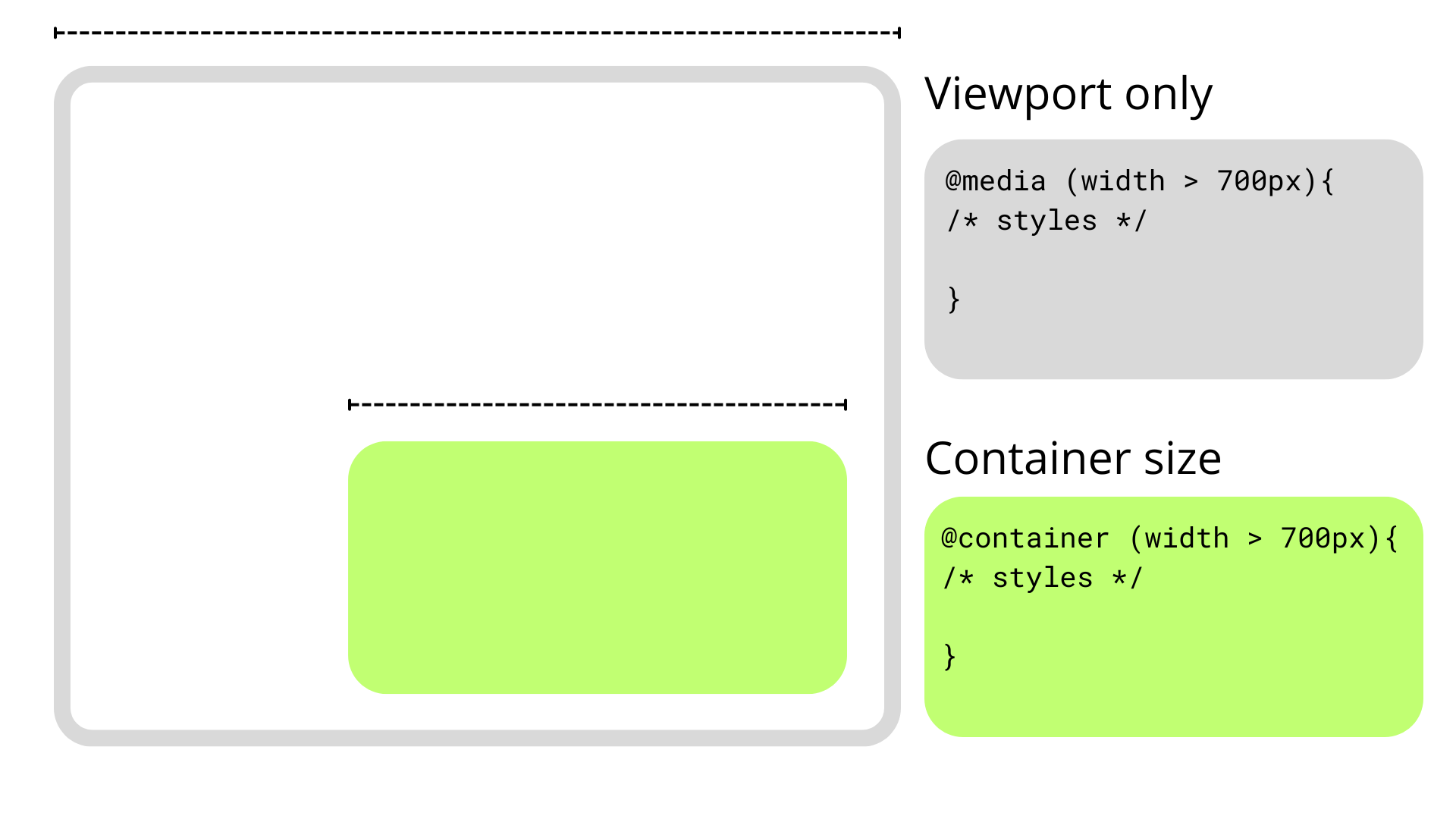

Why Container Queries

Talking purely about responsive design, media queries have simply fallen short in some aspects, but I think the main one is that they are context-agnostic in the sense that they only consider the viewport size when applying styles without involving the size or dimensions of an element’s parent or the content it contains.

This usually isn’t a problem since we only have a main element that doesn’t share space with others along the x-axis, so we can style our content depending on the viewport’s dimensions. However, if we stuff an element into a smaller parent and maintain the same viewport, the media query doesn’t kick in when the content becomes cramped. This forces us to write and manage an entire set of media queries that target super-specific content breakpoints.

Container queries break this limitation and allow us to query much more than the viewport’s dimensions.

Container size queries work similarly to media queries but allow us to apply styles depending on the container’s properties and computed values. In short, they allow us to make style changes based on an element’s computed width or height regardless of the viewport. This sort of thing was once only possible with JavaScript or the ol’ jQuery, as this example shows.
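In CSS alone, the difference between the two approaches might look like the following rough sketch. The .sidebar and .card class names and the 40em breakpoint are hypothetical, chosen only for illustration.

```css
/* Media query: reacts to the viewport, no matter how much room
   the card's parent actually has */
@media (min-width: 40em) {
  .card {
    display: grid;
    grid-template-columns: 1fr 2fr;
  }
}

/* Container size query: reacts to the width of the nearest ancestor
   registered as a size container (here, a hypothetical .sidebar) */
.sidebar {
  container-type: inline-size;
}

@container (min-width: 40em) {
  .card {
    display: grid;
    grid-template-columns: 1fr 2fr;
  }
}
```

With the second version, a .card placed inside a narrow .sidebar keeps its stacked layout even on a wide screen, because the query looks at the container rather than the viewport.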

As noted earlier, though, container queries can query an element’s styles in addition to its dimensions. In other words, container style queries can look at and track an element’s properties and apply styles to other elements when those properties meet certain conditions, such as when the element’s background-color is set to hsl(0 50% 50%).

That’s what we mean when talking about CSS Container Style Queries. It’s a proposed feature defined in the same CSS Containment Module Level 3 specification as CSS Container Size Queries — and one that’s currently unsupported by any major browser — so the difference between style and size queries can get a bit confusing as we’re technically talking about two related features under the same umbrella.

We’d do ourselves a favor to backtrack and first understand what a “container” is in the first place.

Containers

An element’s container is any ancestor with a containment context; it could be the element’s direct parent or perhaps a grandparent or great-grandparent.

A containment context means that a certain element can be used as a container for querying. Unofficially, you can say there are two types of containment context: size containment and style containment.

Size containment means we can query and track an element’s dimensions (i.e., aspect-ratio, block-size, height, inline-size, orientation, and width) with container size queries as long as it’s registered as a container. Tracking an element’s dimensions requires a little processing in the client. One or two elements are a breeze, but if we had to constantly track the dimensions of all elements — including resizing, scrolling, animations, and so on — it would be a huge performance hit. That’s why no element has size containment by default, and we have to manually register a size query with the CSS container-type property when we need it.

On the other hand, style containment lets us query and track the computed values of a container’s specific properties through container style queries. As it currently stands, we can only check for custom properties, e.g. --theme: dark, but soon we could check for an element’s computed background-color and display property values. Unlike size containment, we are checking style values rather than measuring layout, which eases the performance cost and allows all elements to have style containment by default.

Did you catch that? While size containment is something we manually register on an element, style containment is the default behavior of all elements. There’s no need to register a style container because all elements are style containers by default.

And how do we register a containment context? The easiest way is to use the container-type property. The container-type property will give an element a containment context and its three accepted values — normal, size, and inline-size — define which properties we can query from the container.

/* Size containment in the inline direction */

.parent {

container-type: inline-size;

}

This example formally establishes size containment. If we had done nothing at all, the .parent element would already be a container with style containment.

Size Containment

That last example illustrates size containment based on the element’s inline-size, which is a fancy way of saying its width. When we talk about normal document flow on the web, we’re talking about elements that flow in an inline direction and a block direction that corresponds to width and height, respectively, in a horizontal writing mode. If we were to rotate the writing mode so that it is vertical, then “inline” would refer to the height instead and “block” to the width.
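To make the inline and block distinction concrete, here is a minimal sketch of size containment in a vertical writing mode. The .sidebar class and the 400px threshold are assumptions made up for illustration, not part of the running example.

```css
/* Hypothetical element, used only for illustration */
.sidebar {
  /* Text now flows top to bottom, so the inline axis is vertical */
  writing-mode: vertical-rl;
  /* Containment follows the inline axis, which is the height here */
  container-type: inline-size;
}

/* The logical inline-size feature tracks the container's inline axis,
   so in this writing mode it responds to the container's height */
@container (inline-size < 400px) {
  .sidebar > * {
    font-size: 0.875rem;
  }
}
```

Querying with the logical inline-size feature rather than width keeps the condition tied to the axis that actually has containment, whichever way the text runs.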

Consider the following HTML:

<div class="cards-container">

<ul class="cards">

<li class="card"></li>

</ul>

</div>

We could give the .cards-container element a containment context in the inline direction, allowing us to make changes to its descendants when its width becomes too small to properly display everything in the current layout. We keep the same syntax as in a normal media query but swap @media for @container:

.cards-container {

container-type: inline-size;

}

@container (width < 700px) {

.cards {

background-color: red;

}

}

Container syntax works almost the same as media queries, so we can use the and, or, and not operators to chain different queries together to match multiple conditions.

@container (width < 700px) or (width > 1200px) {

.cards {

background-color: red;

}

}

Elements in a size query look for the closest ancestor with size containment so we can apply changes to elements deeper in the DOM, like the .card element in our earlier example. If there is no size containment context, then the @container at-rule won’t have any effect.

/* 👎

* Apply styles based on the closest container, .cards-container

*/

@container (width < 700px) {

.card {

background-color: black;

}

}

Just looking for the closest container is messy, so it’s good practice to name containers using the container-name property and then specify which container we’re tracking in the container query just after the @container at-rule.

.cards-container {

container-name: cardsContainer;

container-type: inline-size;

}

@container cardsContainer (width < 700px) {

.card {

background-color: #000;

}

}

We can use the shorthand container property to set the container name and type in a single declaration:

.cards-container {

container: cardsContainer / inline-size;

/* Equivalent to: */

container-name: cardsContainer;

container-type: inline-size;

}

The other container-type we can set is size, which works exactly like inline-size — only the containment context is both the inline and block directions. That means we can also query the container’s height sizing in addition to its width sizing.

/* When container is less than 700px wide */

@container (width < 700px) {

.card {

background-color: black;

}

}

/* When container is less than 900px tall */

@container (height < 900px) {

.card {

background-color: white;

}

}

And it’s worth noting here that if two separate (not chained) container rules match, the most specific selector wins, true to how the CSS Cascade works.
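As a rough illustration of that cascade behavior, the sketch below gives the first rule a more specific selector. The explicit height and the .cards .card selector are assumptions added for the example; a container-type of size generally needs a height that does not depend on the container's contents.

```css
.cards-container {
  container-type: size;
  /* Assumed explicit height, since the contents no longer determine it */
  height: 600px;
}

/* Specificity (0, 2, 0) */
@container (width < 700px) {
  .cards .card {
    background-color: black;
  }
}

/* Specificity (0, 1, 0) */
@container (height < 900px) {
  .card {
    background-color: white;
  }
}
```

When both conditions match, the card stays black even though the white rule comes later, because the container conditions themselves add no specificity. If both rules used the plain .card selector, as in the earlier snippet, the later rule would win instead.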

So far, we’ve touched on the concept of CSS Container Queries at its most basic. We define the type of containment we want on an element (we looked specifically at size containment) and then query that container accordingly.

Container Style Queries

The third value that is accepted by the container-type property is normal, and it sets style containment on an element. Both inline-size and size are stable across all major browsers, but normal is newer and only has modest support at the moment.

I consider normal a bit of an oddball because we don’t have to explicitly declare it on an element since all elements are style containers with style containment right out of the box. It’s possible you’ll never write it out yourself or see it in the wild.

.parent {

/* Unnecessary */

container-type: normal;

}

If you do write it or see it, it’s likely to undo size containment declared somewhere else. But even then, it’s possible to reset containment with the global initial or revert keywords.

.parent {

/* All of these (re)set style containment */

container-type: normal;

container-type: initial;

container-type: revert;

}

Let’s look at a simple and somewhat contrived example to get the point across. We can define a custom property in a container, say a --theme.

.cards-container {

--theme: dark;

}

From here, we can check if the container has that desired property and, if it does, apply styles to its descendant elements. We can’t directly style the container since it could unleash an infinite loop of changing the styles and querying the styles.

.cards-container {

--theme: dark;

}

@container style(--theme: dark) {

.cards {

background-color: black;

}

}

See that style() function? In the future, we may want to check if an element has a max-width: 400px through a style query instead of checking if the element’s computed value is bigger than 400px in a size query. That’s why we use the style() wrapper to differentiate style queries from size queries.

/* Size query */

@container (width > 60ch) {

.cards {

flex-direction: column;

}

}

/* Style query */

@container style(--theme: dark) {

.cards {

background-color: black;

}

}

Both types of container queries look for the closest ancestor with a corresponding containment-type. In a style() query, it will always be the parent since all elements have style containment by default. In this case, the direct parent of the .cards element in our ongoing example is the .cards-container element. If we want to query non-direct parents, we will need the container-name property to differentiate between containers when making a query.

.cards-container {

container-name: cardsContainer;

--theme: dark;

}

@container cardsContainer style(--theme: dark) {

.card {

color: white;

}

}

Style queries are completely new and bring something never seen in CSS, so they are bound to have some confusing qualities as we wrap our heads around them — some that are completely intentional and well thought-out and some that are perhaps unintentional and may be updated in future versions of the specification.

Style and Size Containment Aren’t Mutually Exclusive

One intentional perk, for example, is that a container can have both size and style containment. No one would fault you for expecting that size and style containment are mutually exclusive concerns, so setting an element to something like container-type: inline-size would make all style queries useless.

However, another funny thing about container queries is that elements have style containment by default, and there isn’t really a way to remove it. Check out this next example:

.cards-container {

container-type: inline-size;

--theme: dark;

}

@container style(--theme: dark) {

.card {

background-color: black;

}

}

@container (width < 700px) {

.card {

background-color: red;

}

}

See that? We can still query the container by style even when we explicitly set the container-type to inline-size. This seems contradictory at first, but it does make sense, considering that style and size queries are computed independently. It’s better this way since the two queries don’t necessarily conflict with each other; a style query could change the colors in an element depending on a custom property, while a size query changes an element’s flex-direction when its container gets too small for its contents.
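Because the two kinds of containment coexist, a single condition can also chain a style query and a size query with the and operator. This is a minimal sketch based on my reading of the @container grammar, reusing the names from the example above; the color declarations are illustrative, and browser support should be checked before relying on it.

```css
.cards-container {
  container-type: inline-size;
  --theme: dark;
}

/* Matches only when the container has the dark theme
   AND is narrower than 700px */
@container style(--theme: dark) and (width < 700px) {
  .card {
    background-color: black;
    color: white;
  }
}
```

The container being queried has to satisfy both kinds of query, so here it resolves to .cards-container, the nearest ancestor that is a size container.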

But We Can Achieve the Same Thing With CSS Classes and IDs

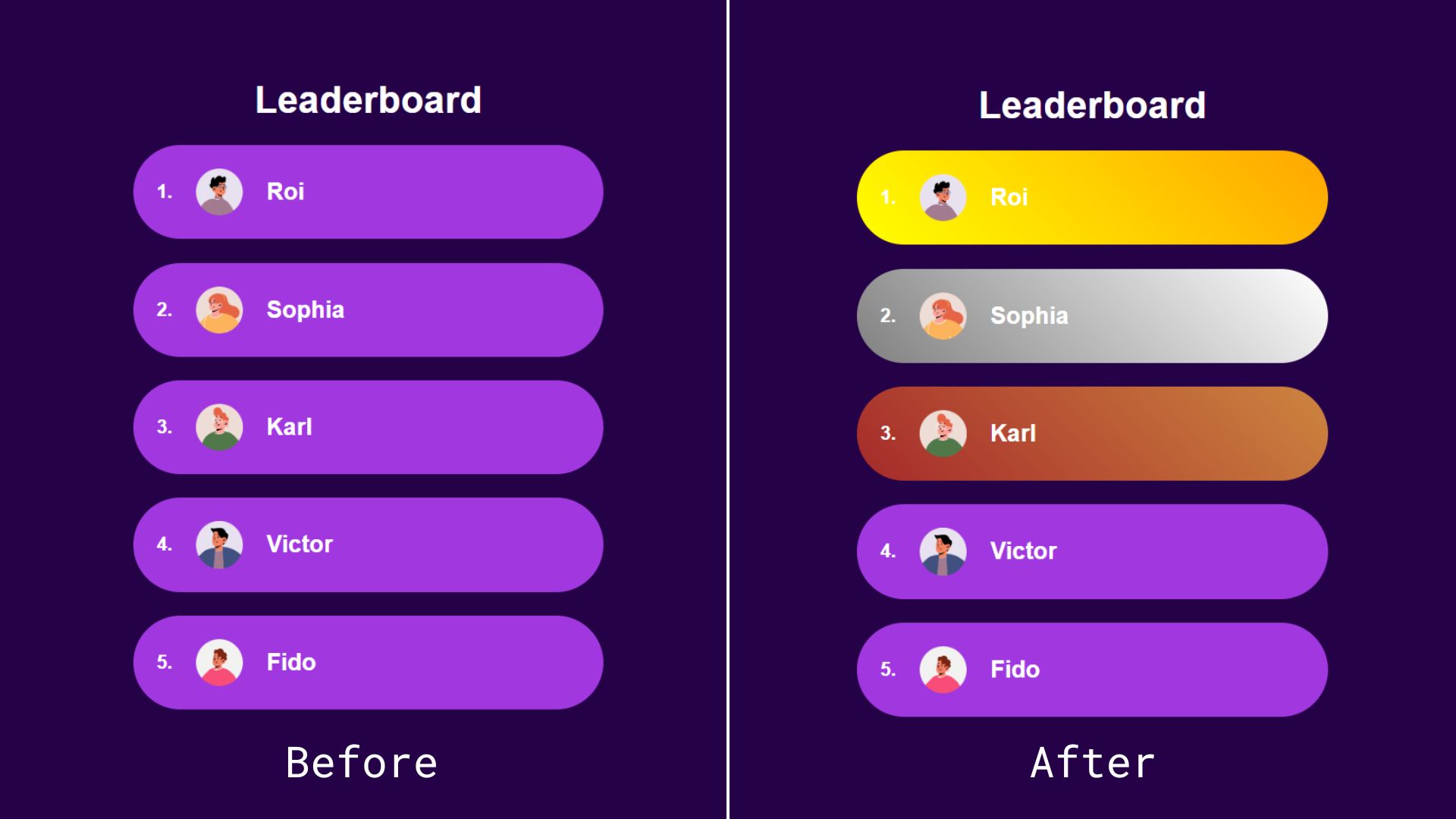

Most container query guides and tutorials I’ve seen use similar examples to demonstrate the general concept, but I can’t stop thinking that, no matter how cool style queries are, we can achieve the same result using classes or IDs and with less boilerplate. Instead of passing the state as an inline style, we could simply add it as a class.

<ol>

<li class="item first">

<img src="..." alt="Roi's avatar" />

<h2>Roi</h2>

</li>

<li class="item second"><!-- etc. --></li>

<li class="item third"><!-- etc. --></li>

<li class="item"><!-- etc. --></li>

<li class="item"><!-- etc. --></li>

</ol>

Alternatively, we could add the position number directly inside an id so we don’t have to convert the number into a string:

<ol>

<li class="item" id="item-1">

<img src="..." alt="Roi's avatar" />

<h2>Roi</h2>

</li>

<li class="item" id="item-2"><!-- etc. --></li>

<li class="item" id="item-3"><!-- etc. --></li>

<li class="item" id="item-4"><!-- etc. --></li>

<li class="item" id="item-5"><!-- etc. --></li>

</ol>

Both of these approaches leave us with cleaner HTML than the container queries approach. With style queries, we have to wrap our elements inside a container, even if we don’t semantically need it, because containers (rightly) are unable to style themselves.

We also have less boilerplate-y code on the CSS side:

#item-1 {

background: linear-gradient(45deg, yellow, orange);

}

#item-2 {

background: linear-gradient(45deg, grey, white);

}

#item-3 {

background: linear-gradient(45deg, brown, peru);

}


As an aside, I know that using IDs as styling hooks is often viewed as a no-no, but that’s only because IDs must be unique in the sense that no two instances of the same ID are on the page at the same time. In this instance, there will never be more than one first-place, second-place, or third-place player on the page, making IDs a safe and appropriate choice in this situation. But, yes, we could also use some other type of selector, say a data-* attribute.
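For completeness, here is how the data-* variant might look. The data-position attribute name is an assumption chosen for this sketch; the gradients are the same ones used for the ID selectors.

```css
/* Assumes markup like: <li class="item" data-position="1"> */
.item[data-position="1"] {
  background: linear-gradient(45deg, yellow, orange);
}

.item[data-position="2"] {
  background: linear-gradient(45deg, grey, white);
}

.item[data-position="3"] {
  background: linear-gradient(45deg, brown, peru);
}
```

Attribute selectors carry the same specificity as a class, so these rules are easier to override than their ID counterparts.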

There is something that could add a lot of value to style queries: a range syntax for querying styles. This is an open feature that Miriam Suzanne proposed in 2023, the idea being that it queries numerical values using range comparisons just like size queries.

Imagine if we wanted to apply a light purple background color to the rest of the top ten players in the leaderboard example. Instead of adding a query for each position from four to ten, we could add a query that checks a range of values. The syntax is obviously not in the spec at this time, but let’s say it looks something like this just to get the point across:

/* Do not try this at home! */

@container leaderboard style(4 <= --position <= 10) {

.item {

background: linear-gradient(45deg, purple, fuchsia);

}

}

In this fictional and hypothetical example, we’re:

- Tracking a container called leaderboard,
- Making a style() query against the container,
- Evaluating the --position custom property,
- Looking for a condition where the custom property is set to a number that is greater than or equal to 4 and less than or equal to 10.
- If the custom property is a value within that range, we set a player’s background color to a linear-gradient() that goes from purple to fuchsia.
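For comparison, a rough sketch of the same intent without a range syntax would spell out every position and chain the queries with the or operator. It assumes the container is named leaderboard and that --position holds a plain integer, as in the hypothetical example above.

```css
/* One equality check per position, chained with "or" */
@container leaderboard
  style(--position: 4) or style(--position: 5) or
  style(--position: 6) or style(--position: 7) or
  style(--position: 8) or style(--position: 9) or
  style(--position: 10) {
  .item {
    background: linear-gradient(45deg, purple, fuchsia);
  }
}
```

Seven equality checks to express one range is exactly the kind of repetition a range comparison would remove.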