Drew McLellan puts the chairs up on the tables, sweeps the floor, and closes off our season, and indeed the entire 24 ways project, with a look back at what it’s meant to run this site as a side project, and what impact side projects can have on the work we do. Will the last one out turn off the Christmas lights?

Fifteen years ago, on a bit of a whim, I decided it would be fun to have a Web Standards version of something like the Perl Advent calendar. A simple website with a new tip or trick each day leading the readers through December up until Christmas.

I emailed a bunch of friends that kept web design and development themed blogs (remember those?) suggesting the idea and asking if they’d like to contribute. My vision had been that each post would be a couple of paragraphs of information. A small nugget of an idea, or a tip, or a suggestion. What happened was something really amazing. I began to receive really insightful blog posts containing some of the most valuable writing I’d seen online all year.

Look at this piece from Ethan Marcotte on Centered Tabs with CSS, or this detailed piece on scripting block quotes from Jeremy Keith. I was blown away, and the scene was set.

Collaboration

What I hadn’t anticipated in 2005 was that this little side project would turn into a fixture of the industry calendar, would introduce me to a raft of field experts, and would have me working with an eclectic team of collaborators for fifteen long seasons.

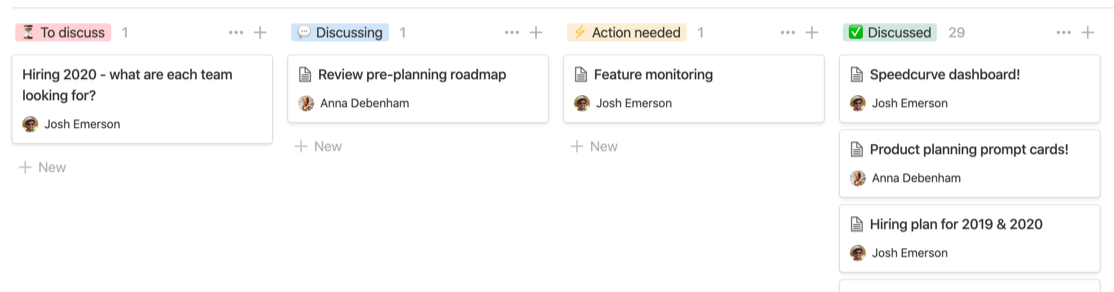

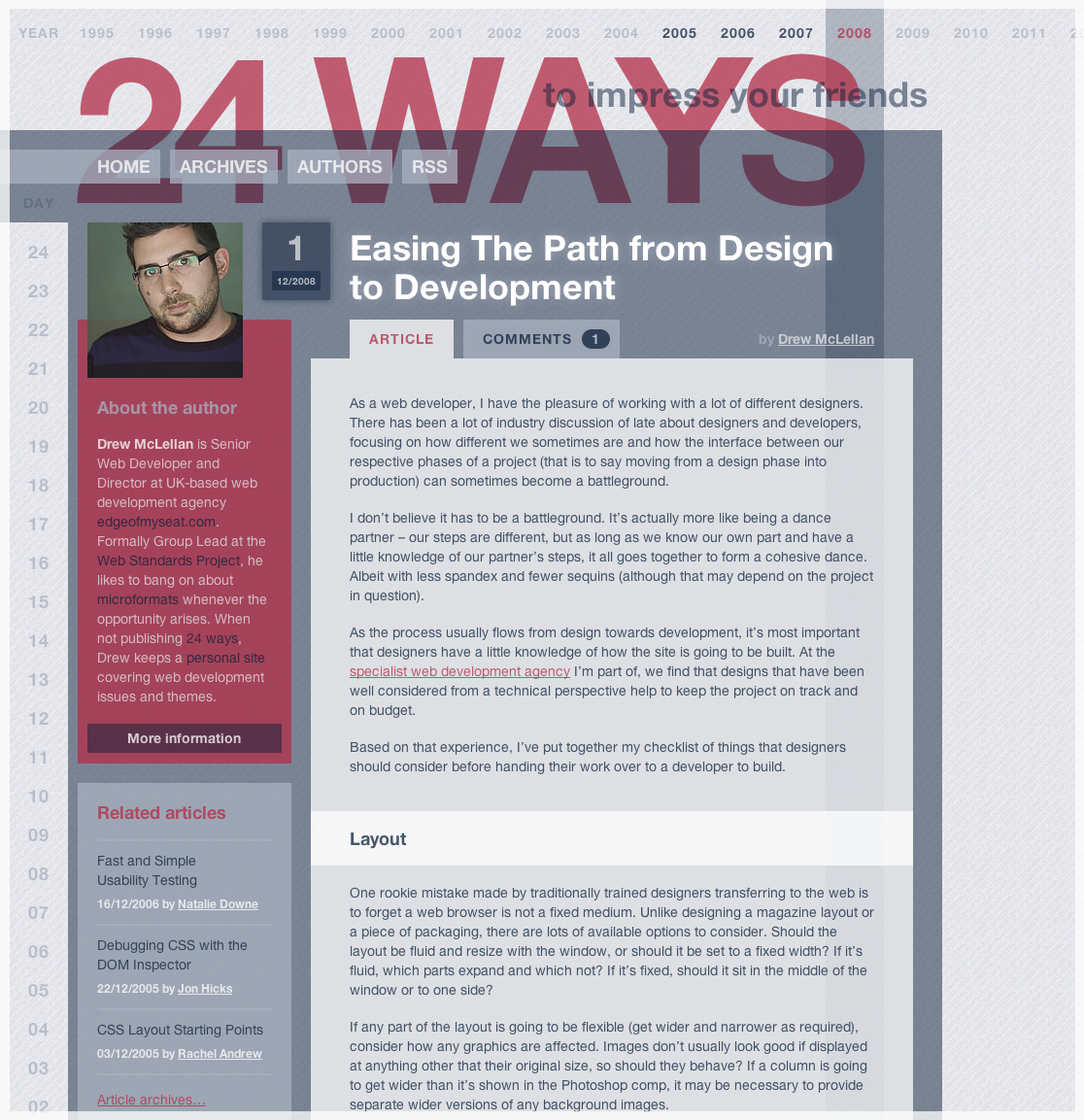

And that last point is crucial. I’ve by no means produced this alone. Rachel Andrew has been a constant supporter in helping each year to see the light of day and producing our ebooks. After a couple of years, Brian Suda stepped in to help me plan and select authors. In 2008, I managed to persuade Tim Van Damme to replace my very basic site design with something altogether more fitting. In 2010, Anna Debenham came on board initially to help with the production of articles, but rapidly became a co-producer working with me on all aspects of the content. Owen Gregory joined up that same year to help with the proofing and editing of articles, and for many years did a fantastic job writing the home page article teasers, which are now but a shadow of their former selves.

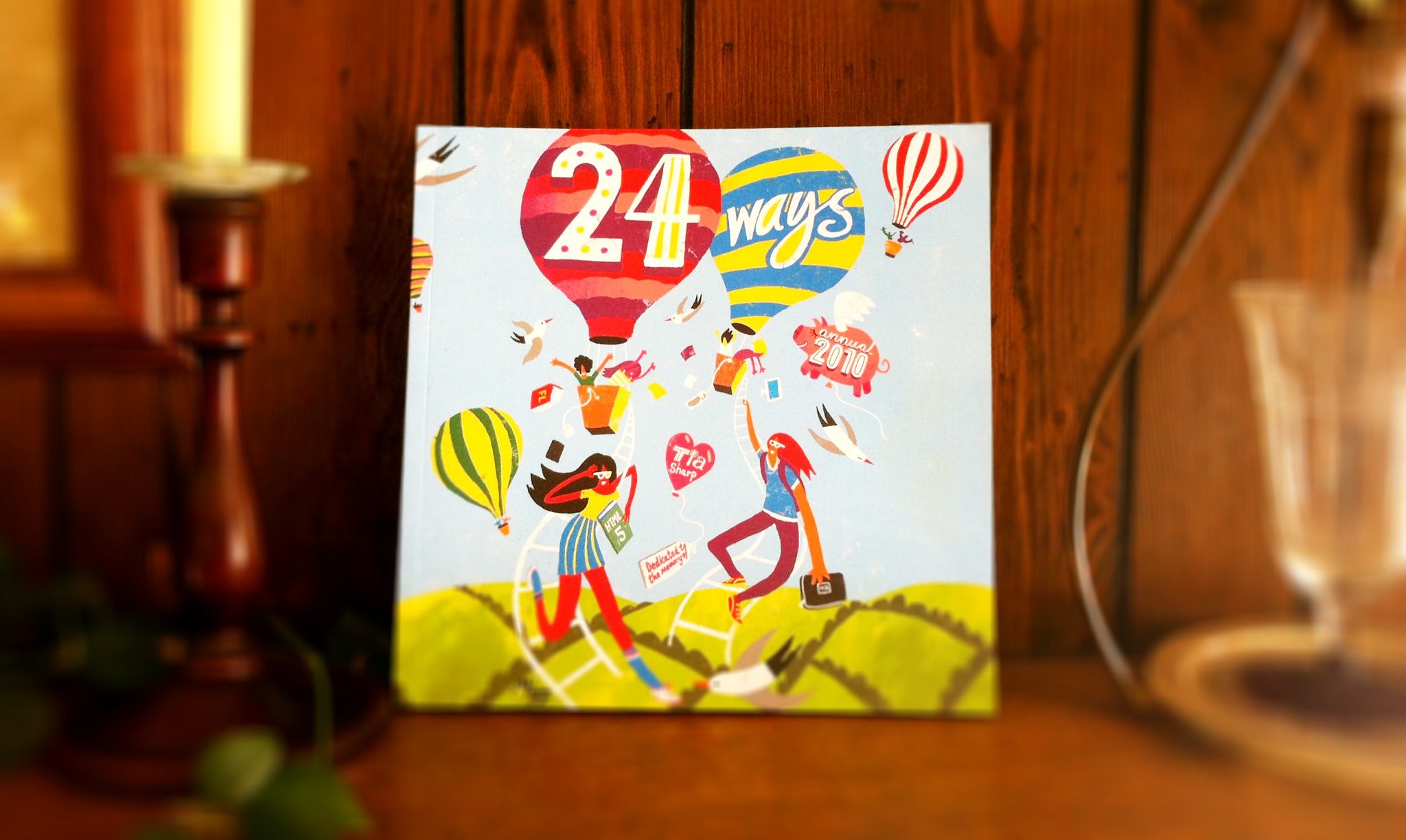

Also in 2010, we produced a book in collaboration with Five Simple Steps, raising funds in memory of Remy and Julie’s daughter, Tia Sharp.

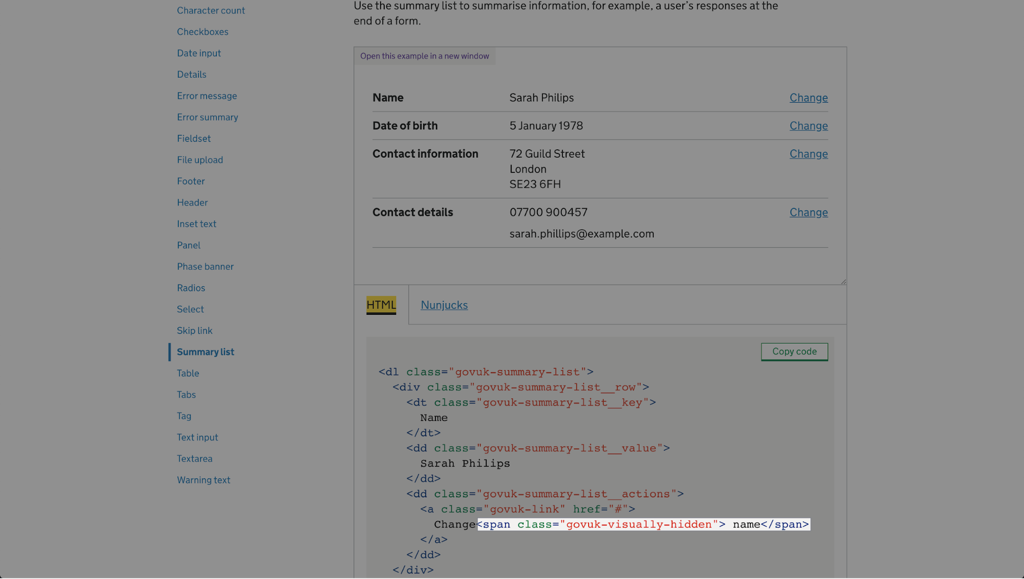

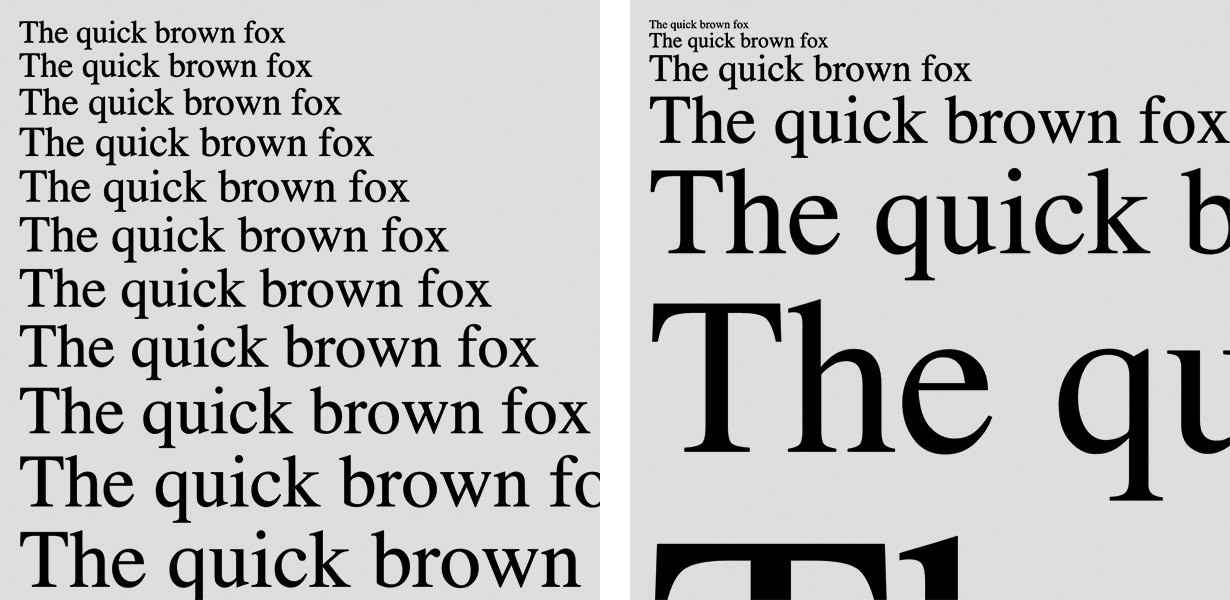

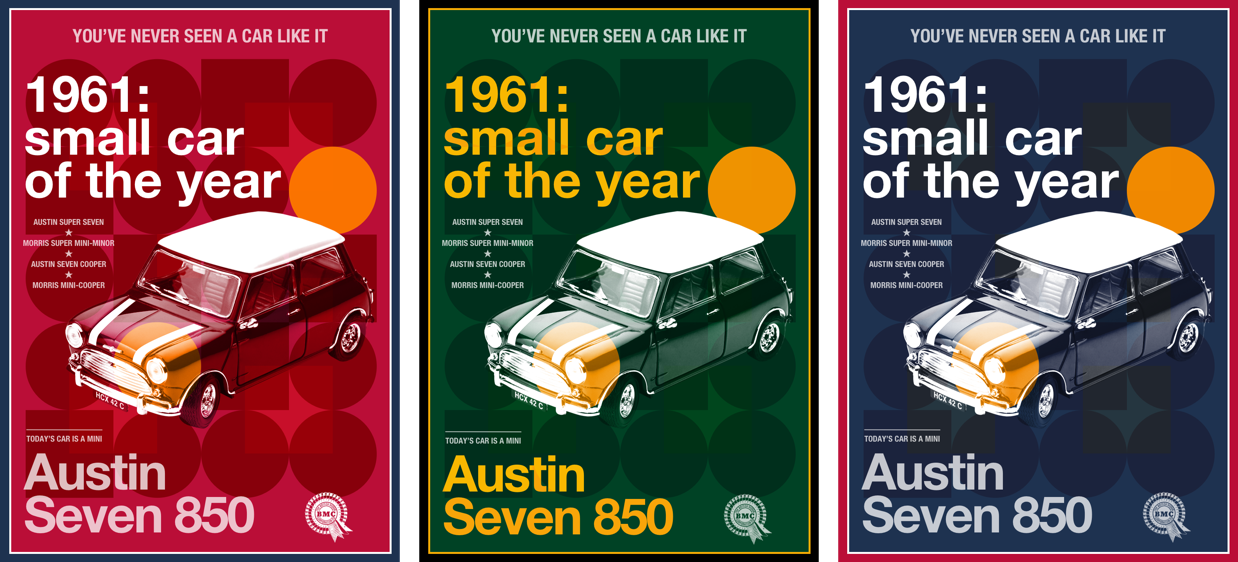

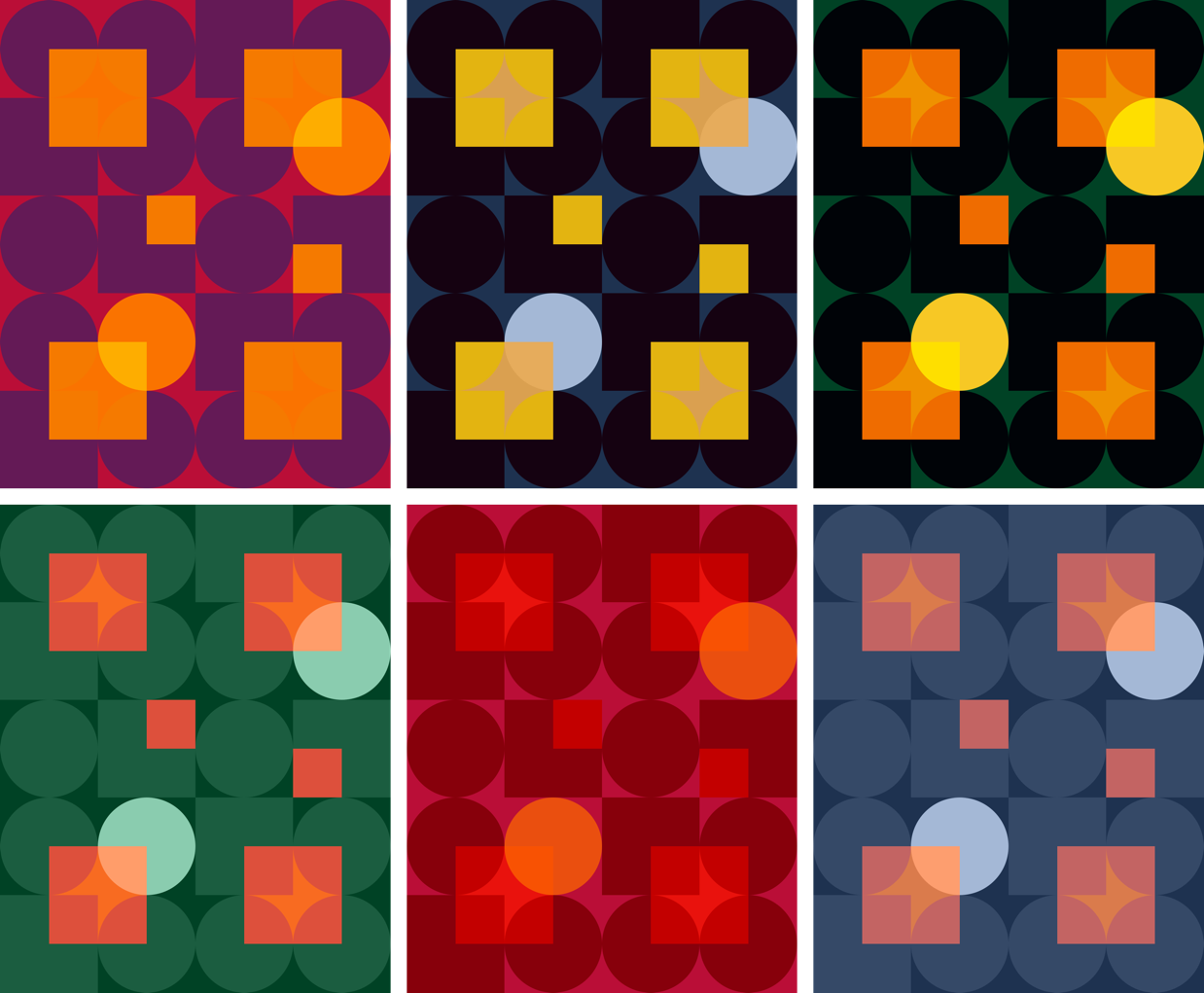

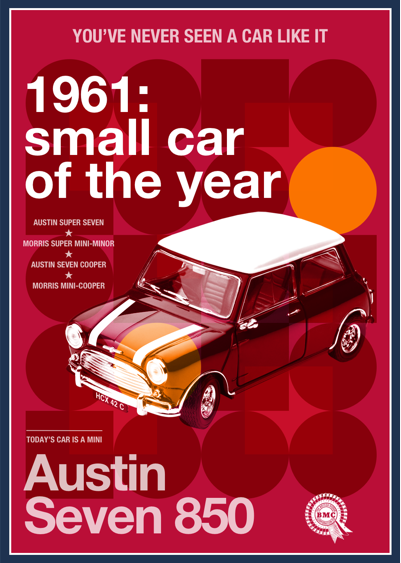

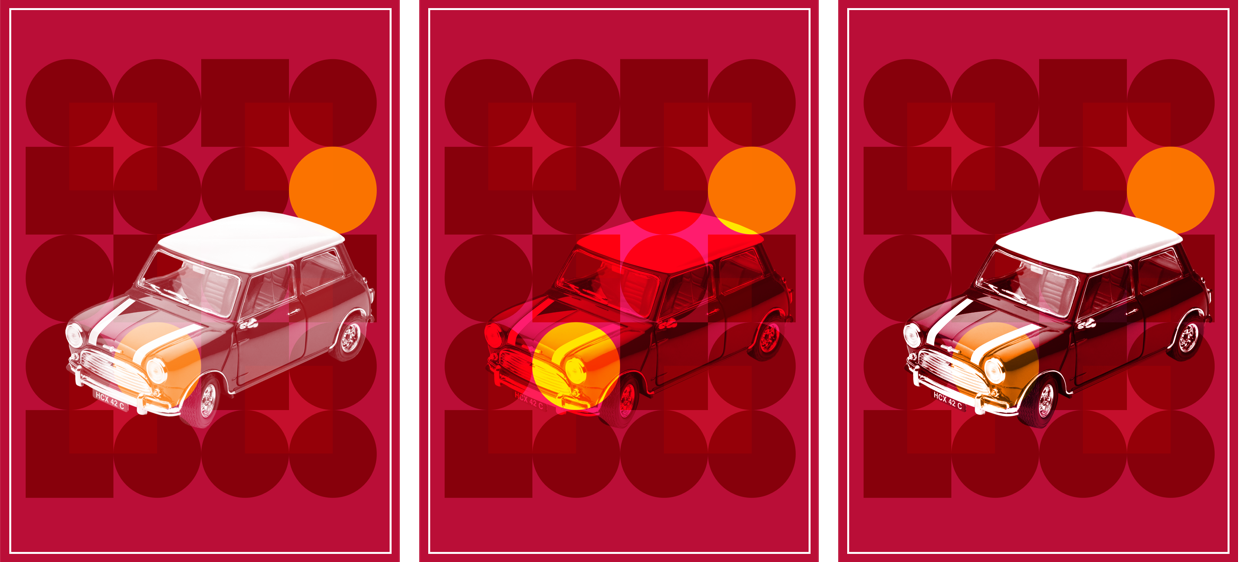

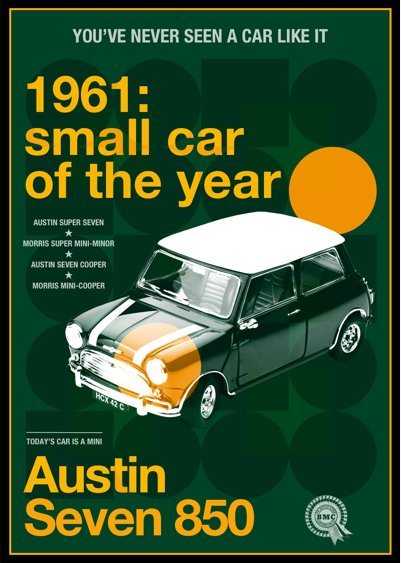

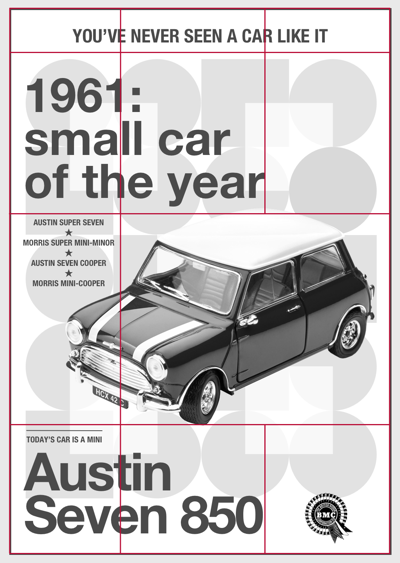

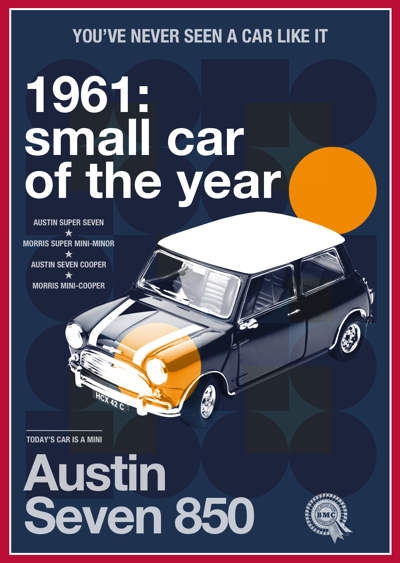

In 2013, Paul Robert Lloyd stepped up to the plate to provide us with the design you see today, which not only subtly shifts colours between each day, but across the years as well. Compare the reds of 2005 to the purples of 2019, and the warm tones of a Day 1 to its correspondingly cool Day 24. It’s a terrific piece of work.

In 2014 we won a Net Award for Best Collaborative Project at a fancy ceremony in London. Many past authors were there, and as it was an award for our collaborative efforts, we all posed with the glassware for photos.

Looking back, looking forward

But even I, Sea Captain Belly Button, am not enough of a navel gazer to be writing an article just about this website. As we draw our fifteenth and final year to a close, it’s important to reflect on what can be learned. Not from the articles (so much!) or from the folly of committing to a nightly publishing schedule for a month every year for fifteen years (don’t do it!) but from the value in starting something not because you have to, but just because you want to. From scratching an itch. From working with a friend just because you love spending time with them. Or from doing something because you see the opportunity for good.

As web designers and developers, we have the opportunity to turn the skills we use in our profession to so many different purposes. In doing so you never know what good might come from it.

Seeing the good

This week I asked around to find out what good others have seen from their side projects. Long time 24 ways contributor Simon Willison had this to say:

Basically every job I’ve ever had relates back to a side-project in some way

— Simon Willison (@simonw) December 17, 2019

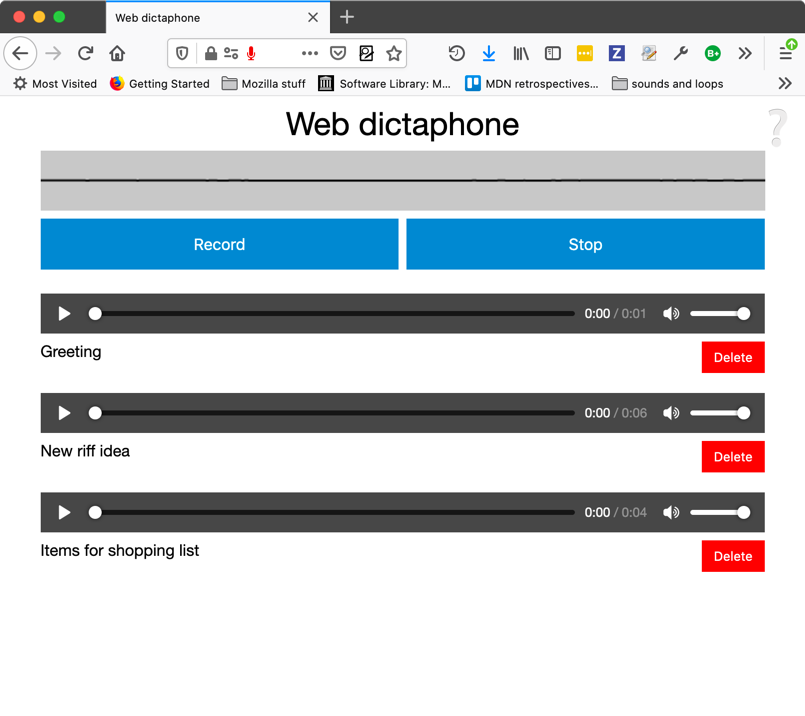

Simon went on to explain how it was a website side project that got him his first job in tech. After that, his personal blog led him to getting a job at Lawrence Journal-World where he created Django. On his honeymoon, Simon and his new wife (and 24 ways contributor) Natalie Downe created Lanyrd, and Simon’s more recent Datasette project landed him a JSK Fellowship at Stanford. That’s an impressive record of side projects, for sure.

Others had similar stories. My good friend Meri Williams is currently CTO of challenger bank Monzo, as well as being a trustee at Stonewall and Chair of The Lead Developer conference.

I got asked to write the book you tech reviewed off the back of a Meetup talk.

Chairing @TheLeadDev has led to me getting to hire & work with so many new brilliant people, as well as getting me multiple CTO gigs (both perm & interim) Got the gig chairing Lead Dev after meeting @RuthYarnit at a dev meet-up in the basement of a pub in Reading (which I think @drewm you also spoke at / introduced me to!)

— Meri Williams 🌈💪🏾 (@Geek_Manager) December 18, 2019

Leading LGBTQ employee network at P&G eventually led to my applying for board role at Stonewall

Again, an impressive list of achievements, and I’m sure both Simon and Meri would have eventually found other routes to their individual success, but the reality is they did it through side projects. Through being present and active, contributing a little to their communities, and receiving so much more back in return.

Of course, not all projects have to be directly related to the web or software to be fulfilling. Of course they don’t. Mark Small and Jack Shoulder embraced their love of a good rear end and created MuseumBums, informally cataloging perfect posteriors for your perusing pleasure. On its success, Mark says:

Ok! We’ve been profiled in the Cambridge Independent, the Sun and the Metro; raised money for Prostate Cancer UK; created a little community of museum fans who aren’t afraid to be a bit silly online; and we’ve got a list of big ideas for developing our 👍🏛️🍑 offer further! 😁

— Marky Smallstice 🎄👓🌈 (@thehistoryb0y) December 17, 2019

Jack adds:

We’ve also got a shout out on @BBCRadio4 and helped a beloved museum achieve record numbers of visitors.

— Jack Frost (@jackshoulder) December 17, 2019

Wow. It’s been a *year*

I had so many heartwarming responses to my request for stories that I really recommend you go over to the thread on Twitter and read it. It’s been one of my favourite sets of replies in a long time.

Focussing on what’s important

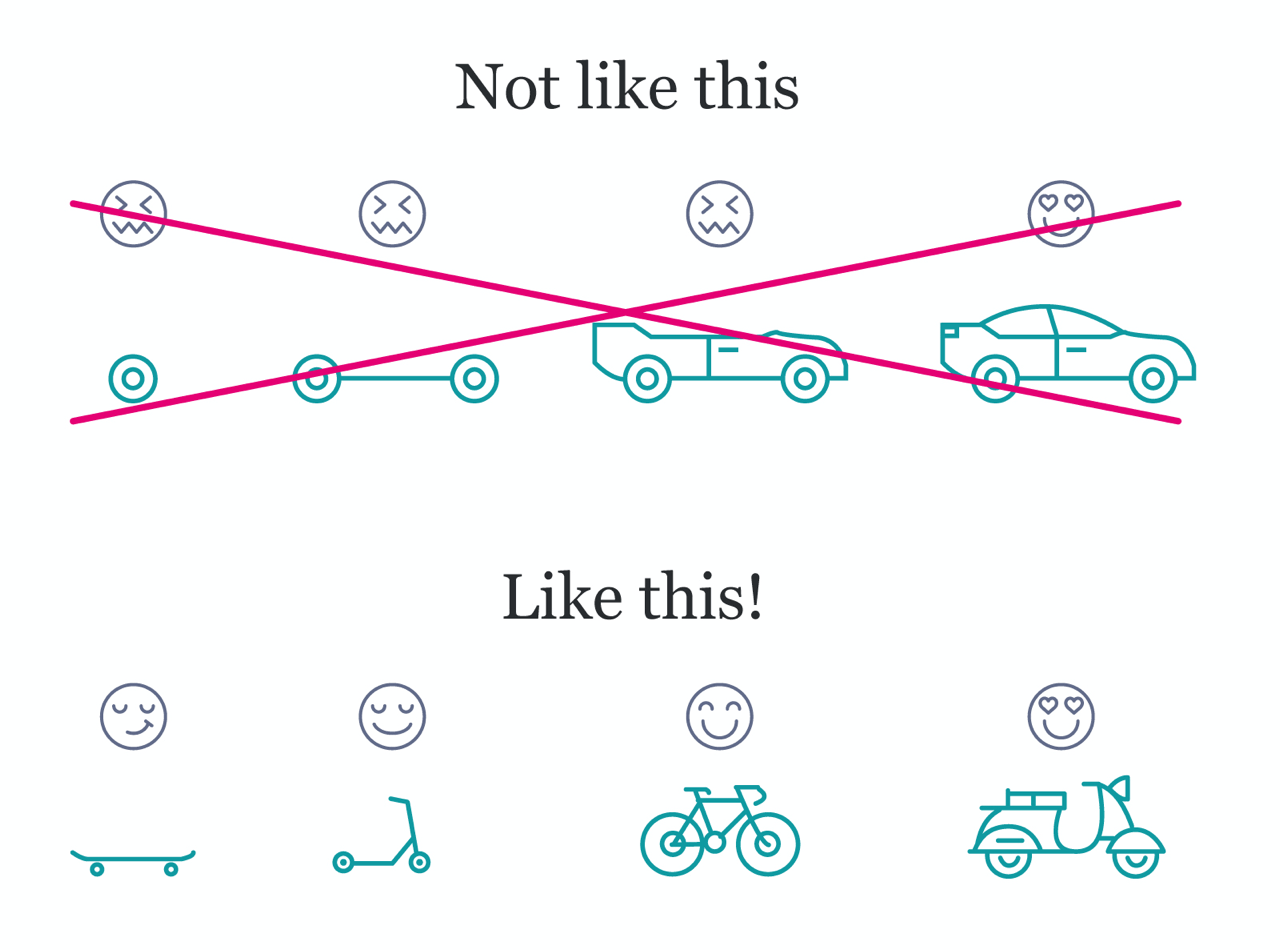

As the years progressed, more and more publications sprang up both at Christmas and throughout the year with how-to articles explaining techniques. As a natural response, 24 ways started mixing up solution-based articles with bigger picture takes on a wider range of topics, but always with a practical takeaway to impress your friends.

After the embarrassment of white dudes that dominated the early years, we actively sought to open the opportunity to write to a wider and more diverse range of experts. While I don’t think we ever got as much racial diversity in our lineup as I would have liked to have achieved, I’m very proud that each season has been closely gender-balanced since 2012. This is something that was never forced or remotely hard to achieve; all it took was an awareness of the potential for bias.

Calling time

With all the benefits that side projects can bring, it’s also important to be mindful of downsides. Not every project will take flight, and those that do can also start to consume valuable time. That’s fine while it’s fun and you’re seeing the benefits, but it’s neither fun nor healthy long-term to have no time away from something that might otherwise be your job.

Spending time with family, friends, and loved ones is equally important, especially at this time of year. Just as anyone who does a lot of sport or fitness will tell you about the value of rest days between your activities to let the body recover, time away from ‘work’ is important to do the same for your brain.

Having run this site every Christmas for 15 seasons, it’s time to take a breather and give it a rest. Who knows if we might return in the future, but no promises. It’s been a good run, and an absolute privilege to provide this small tradition to the community I love.

So from me and the whole 24 ways family, Happy Christmas to all, and to all a good night.

About the author

Drew McLellan is a developer and content management consultant from Bristol, England. He’s the lead developer for the popular Perch and Perch Runway content management systems, and public speaking portfolio site Notist. Drew was formerly Group Lead at the Web Standards Project, and a Search Innovation engineer at Yahoo!. When not publishing 24 ways, he keeps a personal site about web development, takes photos, tweets a lot and tries to stay upright on his bicycle.